
01 March 2026

ARCHER2 Calendar

The Beehive Lantern of Porosity - the Hidden Architecture of ZIF-71 Dr Debayan Mondal, Multifunctional Materials and Composites (MMC) Laboratory, Department of Engineering Science, University of Oxford Harnessing the computational power of ARCHER2 alongside the CRYSTAL23 quantum-chemistry code, we unveil the hidden architectural marvel within the porous framework of ZIF-71....

01 February 2026

ARCHER2 Calendar

Lattice Boltzmann modelling of droplet equatorial streaming in an electric field Dr Geng Wang, Department of Mechanical Engineering, University College London In a strong electric field, when a low-viscosity droplet is placed in a medium with higher electric conductivity and permittivity, it forms a lens-like shape and continuously generates detached...

01 January 2026

ARCHER2 Calendar

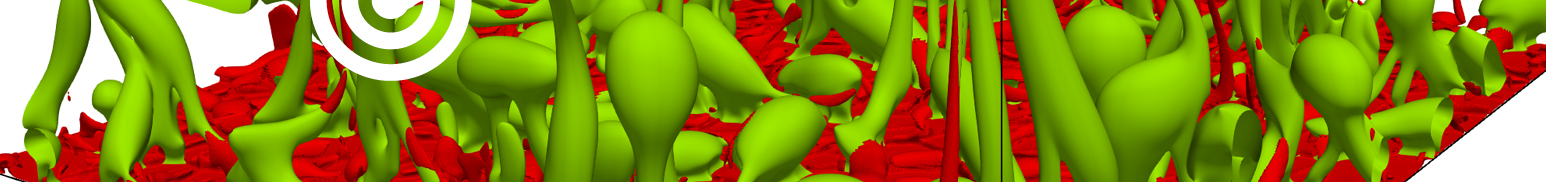

Formation of a Reverse Kármán Vortex Street in the Wake of a Morphing Wing Miss Hibah Saddal, Chandan Bose, Department of Aerospace Engineering, University of Birmingham * * * Winning Image, ARCHER2 Image and Video Competition 2025 * * * A fully flexible aerofoil, with varying flexibilities at the leading...

16 December 2025

ARCHER2 Support team

The ARCHER2 Service will observe the UK Public holidays and will be closed for Christmas Day, Boxing Day and New Year’s Day. We fully expect the ARCHER2 service to be available during these holidays but we will not have staff on the Service Desk to deal with any queries or...

16 December 2025

EPCC

At the beginning of December, the CAKE (Computational Abilities Knowledge Exchange) team went to Computing Insight UK (CIUK) 2025 in Manchester, which takes place in the first week of December every year and is sometimes jokingly called the “Christmas Party of the UK HPC community”. Read the post on the...

01 December 2025

ARCHER2 Calendar

Leading-edge vortex instability over a plunging swept wing Alexander Cavanagh, Autonomous Systems and Connectivity, University of Glasgow The image shows the flow field over a plunging swept wing and the vortices that are formed due to the motion. The colouring is by normalised velocity magnitude. Instabilities are present where the...

01 November 2025

ARCHER2 Calendar

Characterisation of the electron distribution of 2D materials and their adsorbates Dr Zhongze Bai, Mechanical Engineering, University College London Electron Localization Function (ELF) and Charge Density Difference (CDD) are important tools in quantum chemistry to analyse electron distribution, bonding properties and intermolecular interactions, which aid in studying chemical reactions, material...

21 October 2025

EPCC

ASiMoV-CCS is a new solver for CFD and combustion simulations in complex geometries. It was developed at EPCC with collaborators as part of the ASiMoV EPSRC Strategic Prosperity partnership. Read the post on the EPCC website

01 October 2025

ARCHER2 Calendar

Effects of "wake steering" on wind turbine flows. The upwind turbine on the right is rotated to steer its wake away from the downstream turbine increasing the overall efficiency. Dr Sébastien Lemaire, EPCC, University of Edinburgh * * * Winning Video, ARCHER2 Image and Video Competition 2024 * * *...

30 September 2025

Lorna Smith

We were pleased to host the ARCHER2 User Advisory Group at the Advanced Computing Facility (ACF) recently, for their regular UAG meeting. The ARCHER2 User Advisory Group (UAG)...

24 September 2025

Anne Whiting

Many thanks to all ARCHER2 users who completed the User Survey this year. We know that when you get asked to review every pair of socks you buy, feedback and survey fatigue sets in very quickly. People who provided their contact details will hear soon if they are one of...

05 September 2025

EPCC

Paul Clark, EPCC’s Director of HPC Systems, reviews the excellent progress made so far during the Advanced Computing Facility’s first ever major shutdown. Read the post on the EPCC website

01 September 2025

ARCHER2 Calendar

Global sea-surface waves Dr Lucy Bricheno, Marine Systems Modelling (Dept. Science and Technology), National Oceanography Centre This animation shows how wind driven ocean waves grow and move over the sea surface. The 'hot' colours red and orange show high waves, and areas with 'cold' colours (white and blue) are calm....

26 August 2025

EPCC

We are preparing for a major shutdown of our ACF site for the first time ever. Paul Clark, EPCC Director of HPC Systems, explains what we are doing and why. Read the post on the EPCC website

01 August 2025

ARCHER2 Calendar

Turbulent times Eric Breard, School of Geosciences, University of Edinburgh * * * Winning Image and overall competition winning entry, ARCHER2 Image and Video Competition 2024 * * * The image shows a reproduction of the June 3rd, 2018 eruption at Fuego volcano, Guatemala. The volcanic plume reached around 15...

01 July 2025

ARCHER2 Calendar

Active turbulence in microswimmer suspension. Dr Giuseppe Negro, ICMCS, School of Physics and Astronomy, University of Edinburgh The colour represents the largest eigenvalue of the Q-tensor, which gives the degree of local microorganism alignment. Resolution: 512 Fourier modes in each spatial direction, comprising in total 1.7 × 10^9 degrees of freedom. The...

01 June 2025

ARCHER2 Calendar

A 'shaking' droplet: mapping lithium atom trajectory Dr Ruitian He, Department of mechanical engineering, University College London This image depicts a slice of a 'shaking' droplet, showing large fluctuations due to atom motion and gas release during the droplet explosion. We conducted reactive molecular dynamics simulations using LAMMPS to visualize...

27 May 2025

EPCC

We were very pleased to welcome over a hundred attendees to Edinburgh this month for this year’s ARCHER2 Celebration of Science. Read the post on the EPCC website

01 May 2025

ARCHER2 Calendar

Pulsed-power driven magnetic reconnection Dr Nikita Chaturvedi, Department of Physics, Imperial College London Magnetic reconnection occurs when anti-parallel magnetic field lines undergo a rapid change in topology. This is thought to occur in the collisional, radiative regime in black hole accretion disks. This simulation is of 'exploding wire arrays' driven...

01 April 2025

ARCHER2 Calendar

Dramatic ionization from the nitrogen dimer during ultrafast laser irradiation Mr Dale Hughes, Centre for Light-Matter Interactions, School of Maths and Physics, Queen's University Belfast Using the ARCHER2 compute capabilities, we have carried out precision calculations to ascertain the behavior of a Nitrogen molecule subject to intense laser fields, on...

01 March 2025

ARCHER2 Calendar

Separated vortex ring above a three-dimensional porous disc Dr Chandan Bose, Aerospace Engineering, School of Metallurgy and Materials, University of Birmingham * * * Winning Early Career entry, ARCHER2 Image and Video Competition 2024 * * * The primary motivation of studying the wake of a permeable disc stems from...

18 February 2025

EPCC

What could be better than hearing you have been awarded a new project on ARCHER2!? Exciting and thrilling as the news may be, if it is your first time managing a new HPC project, then the processes involved in managing the resources and getting all your team set up and...

01 February 2025

ARCHER2 Calendar

Modelling hydrogen deposition: assessing climate impacts of interactive methane and hydrogen Dr Megan Brown, Yusuf Hamied Department of Chemistry, University of Cambridge Atmospheric hydrogen indirectly contributes to climate change by extending the lifetime of methane and increasing the production of other greenhouse gases. Hydrogen lifetime in the atmosphere is poorly...

01 January 2025

ARCHER2 Calendar

Wishing everyone in the ARCHER2 community a wonderful 2025 Icequakes: vibrating supercooled water in the nanopore Pengxu Chen, School of Engineering, The University of Edinburgh Ice nucleation within supercooled liquid is the process where the first few ice crystals, comprising a few molecules, begin to form. The accompanying image vividly...

17 December 2024

Alice Pettitt

Alice Pettitt, a researcher working on their PhD, has written about how the ARCHER2 service supported their work and development. Why did you need to use ARCHER2 for your research (need for computational research and that size of machine)? For my PhD project, I characterised a protein called ORF6 from...

13 December 2024

ARCHER2 Support team

The ARCHER2 Service will observe the UK Public holidays and will be closed for Christmas Day, Boxing Day and New Year’s Day. We fully expect the ARCHER2 service to be available during these holidays but we will not have staff on the Service Desk to deal with any queries or...

01 December 2024

ARCHER2 Calendar

Silico model for assessing individual responses to irregular heart rhythm treatments. Carlos Edgar Lopez Barrera, Queen Mary University of London, SEMS * * * Winning Early Career entry, ARCHER2 Image and Video Competition 2023 * * * This video demonstrates the creation of a personalized in silico model for assessing...

28 November 2024

Andy Turner

In this blog post we report on work we have been doing to estimate greenhouse gas (GHG) emissions associated with the ARCHER2 service, describe the methodology we use to estimate emissions from the service and the new tools we have developed to help users estimate the emissions from their use...

19 November 2024

EPCC

As part of our Continual Service Improvement, we are moving to a new system for registering for our ARCHER2 Training Courses. Previously, all course registrations have been done via a web form, and once registration is approved, then an invitation is sent out with details of how to register in...

12 November 2024

EPCC

We are very pleased to announce a new ARCHER2 GPU Driving Test. This offers a simple and fast access route to try out the ARCHER2 AMD GPU Development Platform first hand and find out what benefits GPUs can bring to your work. The Driving Test The ARCHER2 Driving Test is...

05 November 2024

EPCC

The new “Art in HPC” session at the SC24 conference will include a video by EPCC’s Sebastien Lemaire. Here he writes about the project. Read the post on the EPCC website

01 November 2024

ARCHER2 Calendar

Fully general evolution of a CFT field dual to gravity outside of a rotating black hole in anti-de Sitter spacetime Dr Lorenzo Rossi, Queen Mary University of London, School of Mathematical Sciences According to the celebrated AdS/CFT, gravitational physics in a certain spacetime called anti-de Sitter (AdS) is dual to...

08 October 2024

EPCC

Gavin Pringle introduces their eCSE project using GeoChemFoam on ARCHER2 for pore-scale modelling. Read the post on the EPCC website

01 October 2024

ARCHER2 Calendar

NACA0012 Turbulent Flow at 12 Degrees Angle of Attack Dr Guglielmo Vivarelli & Dr Moshen Lahooti, Imperial College London, Aeronautical Engineering & Newcastle University The image shows the flow transitioning to turbulent right at the beginning of the NACA0012 aerofoil at 12 degrees angle of attack and a Reynolds number...

01 September 2024

ARCHER2 Calendar

The Galewsky Jet with a moving mesh Dr Jack Betteridge, Imperial College London, Mathematics This video shows a simulation of a jet stream around the North Pole over the period of one week. The rotating shallow-water equations are solved on the surface of a sphere, using the same numerical methods...

01 August 2024

ARCHER2 Calendar

Flow past a succulent-inspired cylinder Dr Oleksandr Zhdanov, University of Glasgow, James Watt School of Engineering Unlike humans and animals, plants are sessile and cannot seek shelter from wind. Most plants rely on reconfiguration to reduce the wind loadings they experience. However, this strategy is not possible for tall arborescent...

09 July 2024

Anne Whiting EPCC

We are delighted to announce we have passed our annual audit against three important standards in quality management, information security management, and business continuity management. Read the post on the EPCC website

01 July 2024

ARCHER2 Calendar

Revealing Real-time Attosecond Dynamics in Xenon Lynda Hutcheson, Queen's University Belfast Advances in laser and computer technology allow us to investigate dynamics on the attosecond (10^(-18) s) timescale: the natural timescale of electronic motion. Using ARCHER2, we perform state-of-the-art simulations with the R-matrix with time-dependence (RMT) codes, treating interactions between...

01 June 2024

ARCHER2 Calendar

Mirroring a Pyroclastic Flow Experiment using Large-Eddy Simulation Dr Eric Breard, School of Geoscience, University of Edinburgh * * * Winning Video, ARCHER2 Image and Video Competition 2023 * * * The image and video are derived from a Large-Eddy Simulation of a pyroclastic flow, consisting of a hot mixture...

13 May 2024

Andy Turner (EPCC)

Thursday 14 March 2024 saw the first of the new, regular ARCHER2 Capability Days. The Capability Day facility proved hugely popular, was very oversubscribed and was extremely successful. This blog post reviews the first ARCHER2 Capability Day and describes the improvements that have been made for the second Capability Day...

07 May 2024

EPCC

Organiser Weronika Filinger gives the background to this ISC’24 Birds of a Feather session. Read the post on the EPCC website

01 May 2024

ARCHER2 Calendar

Gravity current propagating past a cylinder Peter Brearley, Imperial College London, Department of Aeronautics Gravity currents are fluid flows driven by density differences, causing the denser fluid to propagate across a surface through the less dense fluid. They are the means of a range of oceanic, atmospheric and geological flows...

15 April 2024

Eleanor Broadway

Annually, on the 8th of March, the world celebrates International Women’s Day (IWD), a day dedicated to highlighting and celebrating the contributions of women to society. As an organisation dedicated to increasing the visibility of women in HPC and raising awareness of the positive impact diversity has on progress in...

03 April 2024

EPCC

Have you ever wanted to go on a tour of ARCHER2? Well, now you can! Virtually. Spyro has produced a hi-res virtual tour of the ARCHER2 computer room for everyone to explore. Visit our Virtual Tour page to find out more

01 April 2024

ARCHER2 Calendar

Oil into a Ketton carbonate rock saturated with water Dr Liang Yang, Cranfield University, Energy and Sustainability This simulated a pure injection drainage process with a non-wetting phase using the open-source Taichi-LBM3D code for the Ketton carbonate, supported by the ARCHER2 eCSE grant: 'MPI implementation of open-source fully-differentiable multiphase lattice...

28 March 2024

Nick Brown (EPCC), Ed Hone (University of Exeter), Andy Turner (EPCC), Marion Weinzierl (University of Cambridge)

On March 14, UK HPC RSEs came together in an online meetup to talk about the HPC RSE community, plan what comes next, and discuss relevant technical topics around High Performance Computing and Research Software Engineering. We had 42 registrations for the event, with a maximum of 29 people in...

19 March 2024

Clair Barrass EPCC

The ARCHER2 Celebration of Science was an opportunity to meet up with a wide range of people involved in the ARCHER2 community, and to hear a range of presentations by some of the Consortia Leaders, PIs and other researchers benefitting from access to ARCHER2, and also from some of those...

01 March 2024

ARCHER2 Calendar

Dance with Fire Dr Jian Fang, Scientific Computing Department, STFC Daresbury Laboratory This video illustrates the interaction between turbulence and a hydrogen flame. Turbulence fluctuations disrupt the combustion, by stretching and bending the flame surface and altering the chemical reaction inside the reaction zone. In the meantime, the high temperature...

01 February 2024

ARCHER2 Calendar

Unsteady Flow Over Random Urban-like Obstacles: A Large Eddy Simulation using uDALES 2.0 Codebase Dr Dipanjan Majumdar, Imperial College London, Civil and Environmental Engineering Department uDALES stands as an open-source large-eddy simulation framework, specifically designed to tackle unsteady flows within the built environment. It is capable of simulating airflow, sensible...

01 January 2024

ARCHER2 Calendar

Wishing everyone in the ARCHER2 community a wonderful 2024 Modelling proton tunnelling in DNA replication Max Winokan, University of Surrey, Quantum Biology DTC * * * Winning Image and overall competition winning entry, ARCHER2 Image and Video Competition 2023 * * * Proton transfer between the DNA bases can lead...

14 December 2023

ARCHER2 Support team

The ARCHER2 Service will observe the UK Public holidays and will be closed for Christmas Day, Boxing Day and New Year’s Day. We fully expect the ARCHER2 service to be available during these holidays but we will not have staff on the Service Desk to deal with any queries or...

01 December 2023

ARCHER2 Calendar

Axial velocity contours showing wakes from stator and rotor blades of a DLR research compressor Dr Arun Prabhakar, University of Warwick, Department of Computer Science The visualization is produced from a simulation of a research compressor, RIG250 from the German Aerospace Centre (DLR), using a mesh consisting of 4.58 billion elements....

30 November 2023

Marion Weinzierl (ICCS, University of Cambridge), Ed Hone (University of Exeter) Andy Turner (EPCC, University of Edinburgh)

After the successful HPC and RSE workshop “Excalibur RSEs meet HPC Champions” which ran as a satellite event on the Friday after RSECon22 in Newcastle, the organisers decided to run a similar event this year. Read the blog post from Marion, Ed and Andy

28 November 2023

Clair Barrass EPCC, Andy Turner EPCC

Working with HPC can be exciting, innovative and rewarding. But it can also be complex, frustrating and challenging. Andy Turner is EPCC’s CSE Architect; he works with RSEs, UK HPC-SIG, DiRAC, The Carpentries and various user communities to share and improve HPC expertise. We asked Andy for his Top 10...

01 November 2023

ARCHER2 Calendar

Magnetic field filaments generated by the current filamentation instability Dr Elisabetta Boella, Lancaster University, Physics Department One of the long-standing questions in plasmas physics, the science that studies ionized gases called plasmas, regards the origin and evolution of magnetic field in plasmas where such field is initially absent. The question...

01 October 2023

ARCHER2 Calendar

Scattering of waves by turbulent vortices in geophysical fluids Dr Hossein Kafiabad, School of Mathematics, University of Edinburgh When the waves travel in the atmosphere and ocean interior, they get scattered by the vortices leading to changes in their direction and wavelength. These changes are not random and can be...

19 September 2023

Anne Whiting EPCC

What happens once every two years, no one quite knows when, though there is a lot of speculation, and no one knows what form it will take? The EPCC ARCHER2 business continuity and disaster recovery (BCDR) test. This tests how well the ARCHER2 team recognises a major scenario, responds to...

12 September 2023

Clair Barrass EPCC

RSECon23 was held this year in sunny Swansea 5th-7th September. Whilst my work at EPCC is not actually that of RSE, it is certainly RSE-adjacent; I support the work of RSEs both here in EPCC and also around the country (sometimes even around the world). My roles include ARCHER2, Cirrus...

01 September 2023

ARCHER2 Calendar

One year in the southwestern Indian Ocean Noam Vogt-Vincent, Department of Earth Sciences, University of Oxford * * * Winning Video, ARCHER2 Image and Video Competition 2022 * * * This video shows one year of sea-surface temperatures from WINDS-C, a 1/50° (c. 2km) resolution ocean model that covers almost...

01 August 2023

Dr Chris Wood

The Nuffield Research Placements (NRP) programme provides hands-on research projects for 16 and 17-year-old school students. EPCC is currently supervising three student visitors. Read the full post on the EPCC blog page

01 August 2023

ARCHER2 Calendar

Maternal blood flow through the intervillous space of human placenta Dr Qi Zhou, The University of Edinburgh, School of Engineering, Institute for Multiscale Thermofluids A snapshot of the maternal blood flow simulated using the immersed-boundary-lattice-Boltzmann method as a suspension of deformable red blood cells through the extravascular intervillous space of...

20 July 2023

Ben Morse

EPCC’s Ben Morse introduces the new HPC Summer School for undergraduate students. Read the full post on the EPCC blog page

13 July 2023

Ben Morse

EPCC’s Ben Morse reviews EPCC attendance at this year’s The Big Bang Fair, one of the UK’s largest science and technology outreach events. Read the full post on the EPCC blog page

01 July 2023

ARCHER2 Calendar

Cloud Development - Cross-section View Domantas Dilys, University of Leeds, School of Earth and Environment In the animation, an idealised cloud is shown, which is produced by a rising warm and moist air mass. There are seven timesteps, showing cloud development over time. Simulation was produced using a revolutionary parcel-based...

20 June 2023

James Richings, Sebastien Lemaire and Rui Apóstolo

The Service Desk is often the first point of contact between the Service team and the outside world. We asked a couple of recent recruits to the Service Desk team to share their experience and insights into this very important role. There are two shifts per day 8am-1pm and 1pm-6pm,...

12 June 2023

Joseph Lee

The field of quantum computing is developing at a rapid pace, with exciting developments in every sub-field including hardware development and algorithm design. Read the full post on the Cirrus blog page

01 June 2023

ARCHER2 Calendar

Expiratory particle dispersion by turbulent exhalation jet during speaking Aleksandra Monka, University of Birmingham, Department of Civil Engineering * * * Winning Image and overall competition winning entry, ARCHER2 Image and Video Competition 2022 * * * The image reveals the extent of expiratory particle dispersion by the turbulent exhalation...

01 May 2023

ARCHER2 Calendar

Simulation of 3D calving dynamics at Jakobshavn Isbrae Iain Wheel, University of St Andrews, School of Geography and Sustainable Development Calving is the breaking off of icebergs at the front of tidewater glaciers (those that flow into the sea). It accounts for around half of the ice mass loss from...

01 April 2023

ARCHER2 Calendar

Proton tunnelling during DNA strand separation Max Winokan, University of Surrey, Quantum Biology DTC Proton transfer between the DNA bases can lead to mutagenic Guanine-Cytosine tautomers. Over the past several decades, a heated debate has emerged over the biological impact of tautomeric forms. In our work, we determine that the...

01 March 2023

ARCHER2 Calendar

Flow within and around a large wind farm Dr Nikolaos Bempedelis, Imperial College London, Department of Aeronautics * * * Winning Early Career entry, ARCHER2 Image and Video Competition 2022 * * * Modern large-scale wind farms consist of multiple turbines clustered together in wind-rich sites. Turbine clustering suffers some...

13 February 2023

Laura Moran EPCC

This technical report by Laura Moran and Mark Bull from EPCC investigates how appropriate the Rust programming language is for HPC systems by using a simple computational fluid dynamics (CFD) code to compare between languages. Rust is a relatively new programming language and is growing rapidly in popularity. Scientific programming...

01 February 2023

Lorna Smith

Many of the articles about ARCHER2 contain a statement such as “ARCHER2 is hosted and operated by EPCC at the University of Edinburgh”. But what does this actually mean? This recent article takes a look at what is involved.

01 February 2023

ARCHER2 Calendar

The self-amplification and the structured chaotic sea Juan Carlos Bilbao-Ludena, Imperial College London The picture shows the instantaneous generation of strain (light blue) by the mechanism of self-amplification of strain (purple contour) in a turbulent flow generated by a wing. A nonlinear process distinctive to turbulence. The contour slices show...

18 January 2023

Thomas Hicken, and eCSE team

The ARCHER2 eCSE programme provides funding to carry out development of software used by the ARCHER2 user community. As part of our commitment to encouraging Early Career Researchers and to developing their skills and experience, we periodically offer the opportunity for a small number of such researchers to attend the...

16 January 2023

Eleanor Broadway

In November 2022, William Lucas and I attended SC22 in Dallas to present an invited talk on behalf of the ARCHER2 CSE team at the ‘RSEs in HPC’ technical program workshop. SC, or the International Conference for High Performance Computing, Networking, Storage and Analysis, is an annual international conference which...

10 January 2023

Calum Muir, ACF team

EPCC’s Advanced Computing Facility (ACF) delivers a world-class environment to support the many computing and data services which we provide, including ARCHER2. This recent article takes a behind-the-scenes look at some of the activities the ACF team undertakes to provide the stable services our users expect.

04 January 2023

ARCHER2 Calendar

Acetylene Molecule subject to intense ultra-fast laser pulse, time evolution of electron probability, sound Dale Hughes, Queen's University Belfast, Physics The movie is a straightforward plot of electron density, derived from substantial Time-Dependent Density Functional Theory calculations carried out in recent months on ARCHER2. The sound is derived from the...

13 December 2022

ARCHER2 Support team

The ARCHER2 Service will observe the UK Public holidays and will be closed for Christmas Day, Boxing Day and New Year’s Day. Because Christmas Day and New Year’s Day fall on Sundays, we will take the official Substitute Day holidays on the 27th and 2nd. We fully expect the ARCHER2...

12 December 2022

Andy Turner (EPCC)

On the 12 December 2022 the default CPU frequency on ARCHER2 compute nodes was set to be 2.0 GHz. Previously, the CPU frequency was unset which meant that the base frequency of the CPU, 2.25 GHz, was almost always used by jobs running on the compute nodes. In this post,...

08 December 2022

Kris Tanev (EPCC)

Computing Insights UK 2022 was the first conference I attended since I began my journey in HPC. It took place in Manchester with the main theme being “Sustainable HPC”. During my two days there I was able to speak to some great professionals and like-minded people. I have learned...

15 November 2022

Anne Whiting EPCC

We are pleased to announce that we have recently passed a flurry of ISO certifications. We had a combined external audit for ISO 9001 Quality and 27001 Information Security from our certification body DNV in September. This looked at how we run the ARCHER2 and Cirrus services on the ISO...

08 November 2022

Juan Rodriguez Herrera

Training is one of the elements that the ARCHER2 service provides to users. Our training programme comprises face-to-face and online courses that range from introductory to advanced levels. We have scheduled several courses for the remainder of 2022. The list can be found as follows: ARCHER2 for Package...

25 October 2022

ARCHER2 Support team

We are very pleased to launch the new ARCHER2 support staff page where you can find pictures and a short bio of all the staff helping to keep the ARCHER2 service running. Whether you are trying to find who has been helping you on the Servicedesk, or trying to remember...

10 October 2022

Andy Turner (EPCC)

One of the big benefits of being a research software engineer (RSE) providing general support for a compared to a researcher or being focussed on a specific project is the ability to take a step back from the specific aspects of particular software and look at the more global view...

04 October 2022

ARCHER2 eCSE team

We have now started to publish the Final Reports from completed Embedded CSE (eCSE) Projects. You can read the reports on the eCSE Final Project page

03 October 2022

ARCHER2 Calendar

Particles surf on plasma waves excited by high-power lasers Nitin Shukla, Instituto Superior Técnico * * * Winning Image and overall winner, ARCHER2 Image and Video Competition 2020 * * * Particle accelerators are fundamental tools for science and society, enabling scientific discoveries and providing unprecedented insights into fundamental properties...

08 September 2022

Clair Barrass EPCC

The ARCHER2 Image competition is currently open for 2022. Last year's overall winning entry was the Early Career video entry: Sea surface temperature (colour) and ice concentration (grey shades) in the Greenland-Scotland Ridge region. Dr Mattia Almansi, Marine Systems Modelling - National Oceanography Centre The video shows the evolution of...

30 August 2022

Clair Barrass EPCC

The ARCHER2 Image competition is currently open for 2022. Last year's winning video was: Shock wave interaction with cavitation bubble. Dr Panagiotis Tsoutsanis, Centre for Computational Engineering Sciences - Cranfield University Interaction of a shock wave moving with Mach=2.4 and a gas-filled water bubble. These types of cavitation-bubbles can be...

22 August 2022

Clair Barrass EPCC

The ARCHER2 Image competition is currently open for 2022. Last year's winning image was: Acoustic field (yellow and blue) with overlapped velocity isosurface (red) obtained with a coupled LES-High order Acoustic coupled solver of an installed jet case Dr Miguel Moratilla-Vega, Loughborough University/Aeronautical and Automotive Engineering Department The image reveals...

03 August 2022

Alexei Borissov EPCC

There are a number of ways to get access to ARCHER2 (see https://www.archer2.ac.uk/support-access/access.html), and all of the ways that provide a significant amount of compute time require the completion of a Technical Assessment (TA). TAs are meant to ensure that it is technically feasible to run the applications users have...

02 August 2022

Stephen Farr EPCC

EPCC provides a variety of training courses as part of the ARCHER2 national supercomputing service. These include introductory, advanced, and domain-specific options. Stephen Farr reports on a recent GROMACS course delivered as part of the ARCHER2 Training. A course which we have recently delivered is “Introduction to GROMACS”. GROMACS is...

18 May 2022

EPCC

The Service Desk team have helped more than a few people get from ‘zero’ to ‘HPC whizz’, so here we summarise all the useful information we have compiled over the years. This isn’t meant as a how-to guide, but a signpost to all the resources and materials which we think...

02 May 2022

Anne Whiting EPCC

If you run a data centre building full of expensive kit used to run a variety of services including ARCHER2, how do you minimise the chances of something negatively impacting the services you run, and how do you decide what to work on first if something does happen that impacts...

10 March 2022

Thomas Blyth EPCC

EPCC provides world-class supercomputing and data facilities and services for science and business. We are a leading centre in the field, renowned globally for research, innovation and teaching excellence. EPCC has three key foundations: the hosting, provision and management of high performance computing (HPC) and data facilities for academia and...

03 March 2022

Eleanor Broadway EPCC

In this blog, I will show results for the optimisation and tuning of NAMD and NEMO based on the ARCHER2 architecture. We looked at the placement of parallel processes and varying the runtime configurations to generalise optimisations for different architectures to guide users in their own performance tests. We found...

14 February 2022

Nick Brown EPCC

In December, EPCC attended and contributed to Computing Insight UK (CIUK), which was held in Manchester over two days. With players from across UK academia and industry, this annual conference focusses on the UK’s contribution to HPC and is a great opportunity to hear about new trends,...

07 February 2022

Andy Turner (EPCC)

In this blog, I will explain how we collect software usage data from Slurm on ARCHER2, introduce the sharing of this data on the ARCHER2 public website and then have a look at differences (or not!) in software usage on ARCHER2 between two months: December 2021: the initial access period...

24 January 2022

ARCHER2 Training Team

We are delighted to announce that the ARCHER2 Driving Test is now available. The ARCHER2 Driving Test is an online assessment tool which allows those new to ARCHER2 to demonstrate that they are sufficiently familiar with ARCHER2 and HPC to start making use of it. It is suitable for anyone...

14 January 2022

George Beckett EPCC

To keep the ARCHER2 National HPC Service running around the clock requires specialised staff, covering everything from the Service Desk and science support, through to hardware maintenance and data-centre hosting, alongside third-party suppliers for power, networking, accommodation, and so on. Coordinating these elements is a complex task, even in normal...

22 November 2021

Andy Turner (EPCC)

The ARCHER2 full system was opened to users on the morning of Monday 22 November 2021. In this blog post I introduce the full system and its capabilities, and look forward to additional developments on the service in the future. TL;DR For those who just want to get stuck in...

30 September 2021

Anne Whiting EPCC

EPCC are delighted to be able to announce that we have passed our ISO 9001 Quality and 27001 Information Security external audits with flying colours. We put the highest importance on service delivery and secure handling of customer data throughout the year. Despite this, it is still a nerve-racking...

29 September 2021

Anne Whiting EPCC

In spring 2020 EPCC became one of exactly 8 organisations in the UK accredited as data processors under the Digital Economy Act (DEA). This was for the hosting and technical management of the National Safe Haven. EPCC is...

21 September 2021

David Henty EPCC

We have all become accustomed to having a wide range of pre- and post-processing tools available to us on our laptops, which can make working on the login nodes of a large HPC system such as ARCHER2 rather inconvenient if your favourite tools aren’t available. On something fairly standard like...

25 August 2021

Michael Bareford (EPCC)

This blog post follows on from “HPC Containers?”, which showed how to run a containerized GROMACS application across multiple compute nodes. The purpose of this post is to explain how that container was made. We turn now to the container factory, the environment within which containers are first created and...

06 August 2021

Michael Bareford (EPCC)

Containers are a convenient means of encapsulating complex software environments, but can this convenience be realised for parallel research codes? Running such codes costs money, which means that code performance is often tuned to specific supercomputer platforms. Therefore, for containers to be useful in the world of HPC, it must...

28 July 2021

Anne Whiting EPCC

This week the final cabinets to complete the full ARCHER2 system have arrived onsite in Edinburgh on multiple trucks. The boxes were so large that doors had to be removed. All cabinets are now safely in place, with water cooling enabled. Further work to integrate them into the system is...

15 July 2021

Kieran Leach (EPCC)

An HPC service such as ARCHER2 manages thousands of user-submitted jobs per day. A scheduler is used to accept, prioritise and run this work. To control how jobs are scheduled, all schedulers have features for defining how work is prioritised. On the ARCHER and ARCHER2...

24 June 2021

Lorna Smith

Today saw a significant milestone in our move towards our full 23 cabinet ARCHER2 system. A small group of CSE staff gained access to the main HPE Cray EX supercomputing system today and are currently putting it through its paces. Andy, William, David, Adrian, Julien and Kevin will be testing...

08 June 2021

Juan Herrera EPCC

The first year of the ARCHER2 service has been very challenging, mainly due to the COVID-19 pandemic. Nonetheless, we have successfully delivered a fully online training programme. Since April 2020, a total of 66 days of training were delivered under the ARCHER2 service. We have used Blackboard Collaborate software for...

01 June 2021

Rebecca How EPSRC

ARCHER2 is funded by UKRI through two partner councils, EPSRC and NERC, who started building the case for the system back in 2016. Rebecca How from EPSRC’s Research Infrastructure team joined the ARCHER2 project in January 2019, taking over the Project Manager role for the upcoming service, while also acting...

01 June 2021

Mark Bull (EPCC)

Quite a few application codes running on ARCHER2 are implemented using both MPI and OpenMP. This introduces an extra parameter that determines performance on a given number of nodes - the number of OpenMP threads per MPI process. The optimum value depends on the application, but is also influenced by...

19 May 2021

Andrew Turner (EPCC)

Back in February, I reviewed the usage of different research software on ARCHER over a large period of its life. Now that we have just come to the end of the first month of charged use on the ARCHER2 4-cabinet system, I thought it would be interesting to have an initial...

11 May 2021

Ralph Burton, Stephen Mobbs, Barbara Brooks, James Groves.

National Centre for Atmospheric Science (NCAS), UK

On the evening of March 19th 2021 a volcanic eruption started in Fagradalsfjall, Iceland. Although the episode posed no threat to aviation (no ash was produced), significant amounts of volcanic gases were (and still are being) released. Such gases can cause respiratory problems, and if the concentrations are high enough,...

06 May 2021

Eleanor Broadway (EPCC)

With the end of the ARCHER service in January 2021, the ARCHER2 4-cabinet pilot system has now been operating as the national service for three months. A new architecture, programming environments, tools and scheduling system present a new challenge for users to experiment with and discover techniques to achieve optimal...

12 April 2021

Kieran Leach EPCC

The HPC Systems Team provides the System Development and System Operations functions for ARCHER2 - but who are we and what do we do? We are a team of 15 System Administrators and Developers who work to deploy, manage and maintain the services and systems offered by EPCC, as well...

11 March 2021

Kieran Leach (EPCC)

We recently received the main ARCHER2 hardware at the ACF and our team recorded the process of installation as this exciting new system was deployed. You’ll see large “Mountain” cabinets being deployed, each of which holds 256 Compute Nodes with 128 CPU cores each, as well as a number of...

04 March 2021

Anne Whiting (EPCC)

It seems strange to be thinking about how to justify investment in ARCHER3 and beyond, with ARCHER2 not fully in service yet, but it is never too early to start planning this. ARCHER generated a significant amount of world-leading science and we fully anticipate ARCHER2 will as well. It is...

19 February 2021

Josephine Beech-Brandt (EPCC)

It’s been a busy week at the Advanced Computing Facility (ACF) with the arrival of the remaining ARCHER2 cabinets. The long journey started from the HPE Cray factory in Chippewa Falls, Wisconsin (birthplace of Seymour Cray) before arriving at Prestwick airport. It took three separate journeys of four lorries to...

11 February 2021

Clair Barrass (EPCC)

Having said Farewell to ARCHER, we invite you to try our short quiz, to see how much you can remember about the service.

09 February 2021

Catherine Inglis (EPCC)

The ARCHER2 eCSE programme provides funding to carry out development of software used by the ARCHER2 user community. As part of our commitment to encouraging and developing Early Career Researchers, we offer a small number of early career researchers the opportunity to attend the eCSE Panel Meeting as observers. The...

05 February 2021

Josephine Beech-Brandt (EPCC)

Last week marked the end of the ARCHER Service after seven years. You may have heard some statistics over the last week about ARCHER but I wanted to tell you some from the helpdesk. During the lifetime of ARCHER, the User Support and Systems teams (SP) have resolved 57,489 contractual...

04 February 2021

Andy Turner (EPCC)

Now that the ARCHER service has finished, I thought it would be interesting to take a brief look at the use of different research software on the service over its lifetime. ARCHER was in service for over 7 years: from late 2013 until its shutdown in early 2021. Over the...

03 February 2021

Clair Barrass (EPCC)

Back in February 2015, a little over a year after the ARCHER service began, EPCC launched an entirely new and innovative access route for users to get time on ARCHER via a “Driving Test”. The idea behind this was to help those who had never used ARCHER, or possibly any...

02 February 2021

Lorna Smith

As the ARCHER service was switched off, our colleagues at the ACF photographed and recorded ARCHER’s final moments. With a particular thank you to Connor, Aaron, Jordan, Craig and Paul at the ACF and to Spyro for putting this video together, we give you ARCHER’s final moment. It is best...

27 January 2021

Lorna Smith (EPCC)

At 8am this morning the ARCHER service was switched off. Funded by EPSRC and NERC, this sees the end of a remarkable service, a service that has acted as the UK’s National HPC Service for the last 7 years. Entering service at the end of 2013, just over 5.6 million...

16 December 2020

Andy Turner

We are currently in (or have recently finished, depending on when you read this) the Early Access period for the ARCHER2 service. During this period, users nominated by their peers and the ARCHER2 teams at EPCC and HPE Cray have had access to the ARCHER2 4-cabinet system. The Early User...

19 November 2020

Anne Whiting EPCC

With ARCHER2 part arrived and in early access, and with all the preparation to move from ARCHER to ARCHER2, with staff working remotely from home and all the other work ongoing, why on earth were we preparing for our annual ISO external audit and how could we make it work?...

27 October 2020

Julien Sindt EPCC

One of the upsides of working on the ARCHER2 CSE team is that, sometimes, one finds oneself in the interesting position of being the only user on the ARCHER2 four-cabinet system (at least the only apparent user – I’m sure that AdrianJ is lurking on one of the compute nodes...

29 July 2020

Clair Barrass

On the 14th July 2020 the first four cabinets of ARCHER2 arrived at the ACF and over the following few days they were unpacked, moved into position, connected up and ultimately powered up ready to go. Gregor Muir was on hand, capturing the whole process in still images and timelapse...

27 July 2020

Gregor Muir

A full week on from the 4-cabinet ARCHER2 installation (affectionately dubbed Mini-Archer2, due to only having 18% of the full Shasta system’s 5848 compute nodes), and life is beginning to quiet down again for the temporary Summer Team at the ACF. This is now the second year that the...

17 July 2020

Lorna Smith

Final build day! The final day of the build of our 4 cabinet system. Yesterday saw the system booted and lots of testing carried out. First job of the day was to locate some erroneous cables that were causing power to not be read properly. Troubleshooting then identified the need...

16 July 2020

Lorna Smith

Day 4 and the vast majority of the work has been done, with the power commissioned and the management and storage systems configured and tested. As of last night, remote access was also enabled. As we near completion a number of our American colleagues have now left to return to...

15 July 2020

Lorna Smith

Day 3 and significant progress has been made. Yesterday saw the fitting of the cooling infrastructure between the mountain cabinets and the CDU. The site’s water supply has now been connected, bled and made live. All the power connections have been made and the CDU has been powered up as...

14 July 2020

Lorna Smith

Yesterday saw the first phase of ARCHER2 arrive on site, with all four Shasta Mountain cabinets moved into their correct positions. The images below show pictures of the back and the front of these cabinets. The red and blue cables are colour coded water pipes for the cooling system,...

13 July 2020

Lorna Smith

The first phase of ARCHER2 is in Edinburgh! The 4 cabinet Shasta Mountain system, the first phase of the 23 cabinet system, has completed its journey from Chippewa Falls in Wisconsin, making its way from Prestwick airport to Edinburgh this morning. The arrival of these large crates has, I admit,...

03 July 2020

Lorna Smith

Covid-19 has created significant challenges for the delivery of the new ARCHER2 system. It is therefore really exciting to see the first 4 cabinets of ARCHER2 leave Cray/HPE’s factory in Chippewa Falls, Wisconsin to begin their journey to Edinburgh. ARCHER2 will replace the current ARCHER system, a Cray XC30, as...

19 June 2020

UKRI

UKRI are having weekly meetings with the ARCHER2 providers, EPCC and Cray/HPE. We are making good progress and are still on track to start installation of some compute capacity (a pilot system) in mid-July. We are also looking at further ARCHER extensions due to the COVID-19 delay. We now expect...

26 March 2020

EPCC

UK Research and Innovation (UKRI) has awarded contracts to run elements of the next national supercomputer, ARCHER2, which will represent a significant step forward in capability for the UK’s science community. EPCC has been awarded contracts to run the Service Provision (SP) and Computational Science and Engineering (CSE) services for...

13 March 2020

EPCC

We are pleased to welcome you to the new ARCHER2 website. Here you will find all the information about the service including updates on progress with the installation and setup of the new machine. Some sections of the site are still under development but we are actively working to ensure...

14 October 2019

UKRI

Details of the ARCHER2 hardware which will be provided by Cray (an HPE company). Following a procurement exercise, UK Research and Innovation (UKRI) are pleased to announce that Cray have been awarded the contract to supply the hardware for the next national supercomputer, ARCHER2. ARCHER2 should be capable on average...