Testing GROMACS on ARCHER2 4-cab

By Julien Sindt (EPCC) on October 27, 2020

One of the upsides of working on the ARCHER2 CSE team is that, sometimes, one finds oneself in the interesting position of being the only user on the ARCHER2 four-cabinet system (at least the only apparent user – I’m sure that AdrianJ is lurking on one of the compute nodes somewhere). For context, the ARCHER2 four-cabinet system has roughly the same core count as the whole of ARCHER, which makes it at least ARCHER’s equal (given the newer CPUs, it’s probably a bit more powerful). So what should one do with sole and total access to a Tier-1 HPC facility, with no other users (except AdrianJ – he’s out there somewhere… he always is…) and a completely empty queue?

I’m sure that, at this point, you’re all screaming your suggestions and, before you ask, I’m not going to be running OpenFOAM uncollated to see whether the system would cope with having to write one file per processor [~128,000 files] per variable (although…). I personally come from a molecular dynamics background so have an affinity for that type of system. Furthermore, I’m aware that the HECBioSim consortium keep a number of ARCHER benchmarks for a couple of codes on their website – it would be interesting to see how those benchmarks perform on the new system. Also, speaking as someone who kept their desktop on for four weeks while waiting for a bubble nucleation simulation to finish running (only to find that the input parameters had been wrong), I’ve got a bit of a vested interest in how well ARCHER2 does with larger MD systems.

Conveniently, I’ve recently compiled a central version of GROMACS. So, a quick download of the GROMACS benchmarks, a couple of minutes of writing a Slurm submission script and we’re off! The smallest system (20k atoms) finishes first – it stops running past 4 nodes… Turns out GROMACS doesn’t like simulating fewer than 20 atoms per processor. While this is to be expected, it’s also a bit disappointing – I have around a thousand nodes (128,000 cores) to myself and this first benchmark crashes at the 0.4% mark. The next system (61k atoms) doesn’t go much further – it runs on 8 nodes, but is too small to run on 16. Frustrating… Clearly, the ARCHER2 nodes need something larger!
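As a rough back-of-the-envelope check on those numbers, here is a minimal Python sketch of the arithmetic. It assumes 128 cores per node (inferred from the node and core counts quoted in this post), that every core on every node is used, and that "around a thousand nodes" means exactly 1000; the function and variable names are purely illustrative.

```python
# Assumption: 128 cores per ARCHER2 node, inferred from "8 nodes (1024 cores)".
CORES_PER_NODE = 128
# Assumption: "around a thousand nodes" is taken as exactly 1000 for the percentages.
TOTAL_NODES = 1000


def atoms_per_core(n_atoms: int, n_nodes: int) -> float:
    """Average number of atoms per core, assuming every core on every node is used."""
    return n_atoms / (n_nodes * CORES_PER_NODE)


def system_fraction(n_nodes: int) -> float:
    """Fraction of the four-cabinet system occupied by a job on n_nodes nodes."""
    return n_nodes / TOTAL_NODES


# (benchmark label, atom count, node counts mentioned in the text)
cases = [("20k", 20_000, (4, 8)), ("61k", 61_000, (8, 16))]

for label, n_atoms, node_counts in cases:
    for n_nodes in node_counts:
        print(f"{label:>4} atoms on {n_nodes:>2} nodes: "
              f"{atoms_per_core(n_atoms, n_nodes):6.1f} atoms/core, "
              f"{system_fraction(n_nodes):.1%} of the system")
```

Under these assumptions the 20k system drops just below 20 atoms per core at 8 nodes, which matches where it stopped running. The 61k system still has about 30 atoms per core at 16 nodes, so the 20-atom figure is best read as a rule of thumb: the real limit probably depends on how the simulation box can be decomposed, not just on the atom count.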

I run the final three systems (465k, 1400k, and 3000k atoms) but, frankly, I’m only interested in that last one at the moment, especially after 465k stops running past 32 nodes (3%… getting there, but still…). Both 1400k and 3000k seem to do well on 64 nodes. OK, how do they compare so far?

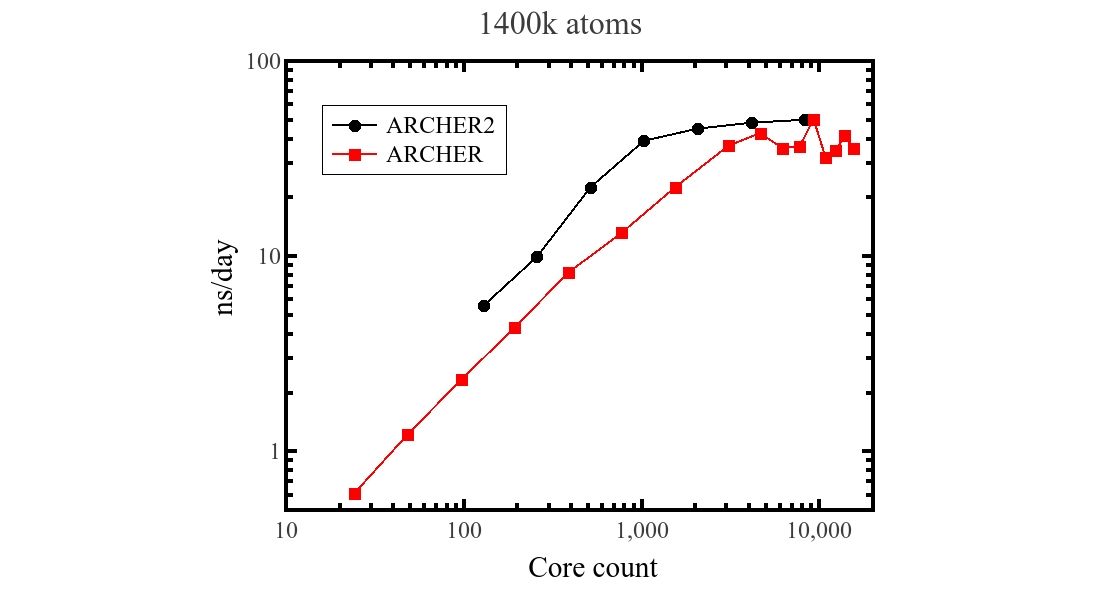

Hmmm… 1400k is going along nicely. There’s some neat linearity in its performance at low node count, but that scaling disappears past 8 nodes (1024 cores). Performance-wise, it’s looking better than ARCHER on a per-core basis – I make that a roughly 3x increase per core. Part of this speed-up might be down to me using the 2020 version of GROMACS (whereas the HECBioSim benchmarks were run with the 2018 version), but that won’t account for all of it: the ARCHER2 cores are just better. The performance plateau is at about the same level (~50 ns/day simulated), but ARCHER2 reaches it at a much lower core count (8 nodes/1024 cores vs 128 nodes/3072 cores on ARCHER). Still, it’s becoming clear that this isn’t the right job to run on the full system…
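One way to arrive at a per-core figure of that size is to compare throughput per core at the point where each machine hits the plateau. The sketch below uses only the approximate numbers quoted above (~50 ns/day, 1024 cores on ARCHER2, 3072 cores on ARCHER); it is an illustration of the arithmetic, not a reanalysis of the benchmark data, and the names are hypothetical.

```python
def ns_per_day_per_core(ns_per_day: float, cores: int) -> float:
    """Simulated nanoseconds per day delivered by each core, on average."""
    return ns_per_day / cores


# Approximate plateau read off the text; both machines level out at about this rate.
PLATEAU_NS_PER_DAY = 50.0

# Core counts at which each machine reaches the plateau, as quoted in the text.
ARCHER2_CORES = 8 * 128   # 8 nodes, assuming 128 cores per node
ARCHER_CORES = 128 * 24   # 128 nodes, assuming 24 cores per node

archer2_rate = ns_per_day_per_core(PLATEAU_NS_PER_DAY, ARCHER2_CORES)
archer_rate = ns_per_day_per_core(PLATEAU_NS_PER_DAY, ARCHER_CORES)
ratio = archer2_rate / archer_rate

print(f"ARCHER2: {archer2_rate:.4f} ns/day per core")
print(f"ARCHER:  {archer_rate:.4f} ns/day per core")
print(f"Per-core ratio at the plateau: {ratio:.1f}x")
```

Because both plateaus sit at roughly the same ns/day, the ratio reduces to the ratio of core counts, which is why the per-core gain comes out at about 3x.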

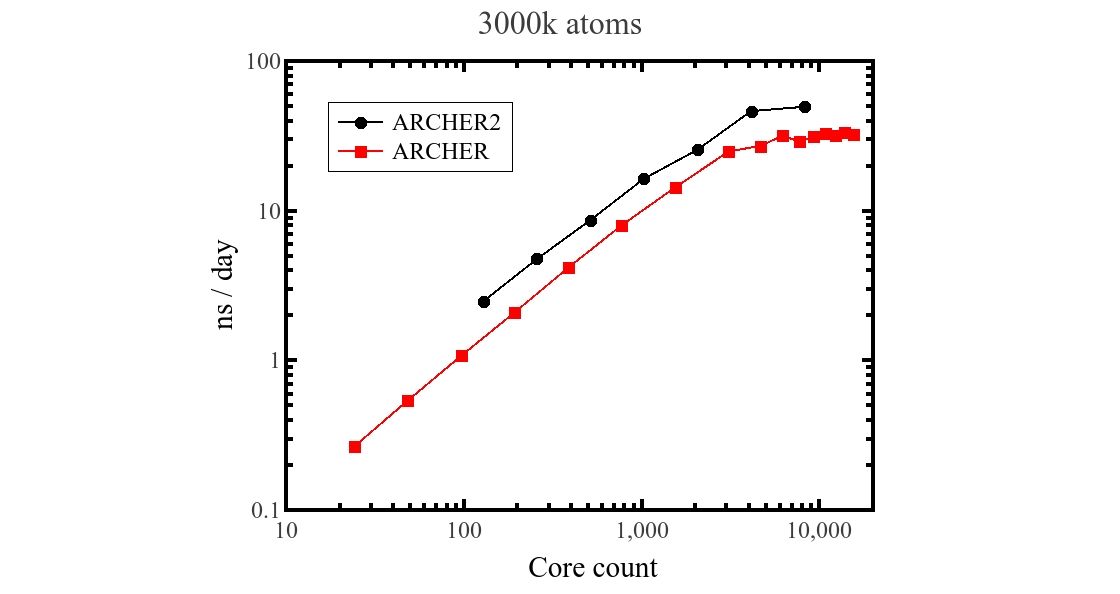

How’s the 3000k system doing? Well, it too is outperforming the ARCHER results (by a factor of ~2x). Interestingly, it plateaus at roughly the same core-count (4096) as the ARCHER results, though the plateau is higher. While the results are good – ARCHER2 is clearly outperforming ARCHER here – I can’t help but feel some disappointment about not being able to run on more than 6% of the system…

I contact one of my friends who puts me on to a massive 12-million-atom benchmark system from the Max Planck Institute – surely that’ll work! I set up my submission scripts to launch on up to half of the ARCHER2 system. It passes 64 nodes… it passes 128 nodes… it passes 256 nodes… It reaches 512 and… crashes…

…

…

The box size is too small to be decomposed over such a large number of cores…

And AdrianJ seems to have woken from his dark slumber and launched a 24-hour full-system job…

P.S. If you have an extra-large system that you’d like to run on the whole of the ARCHER2 four-cabinet system, please get in touch. In the event that I get ARCHER2 to myself again, I’m happy to try to run it!