Scalable and robust Firedrake deployment on ARCHER2 and beyond

ARCHER2-eCSE04-05

PI: Dr David A Ham (Imperial College London)

Co-I(s): Prof Patrick E. Farrell (University of Oxford), Dr Jack Betteridge (Imperial College London)

Technical staff: Dr Jack Betteridge (Imperial College London)

Subject Area:

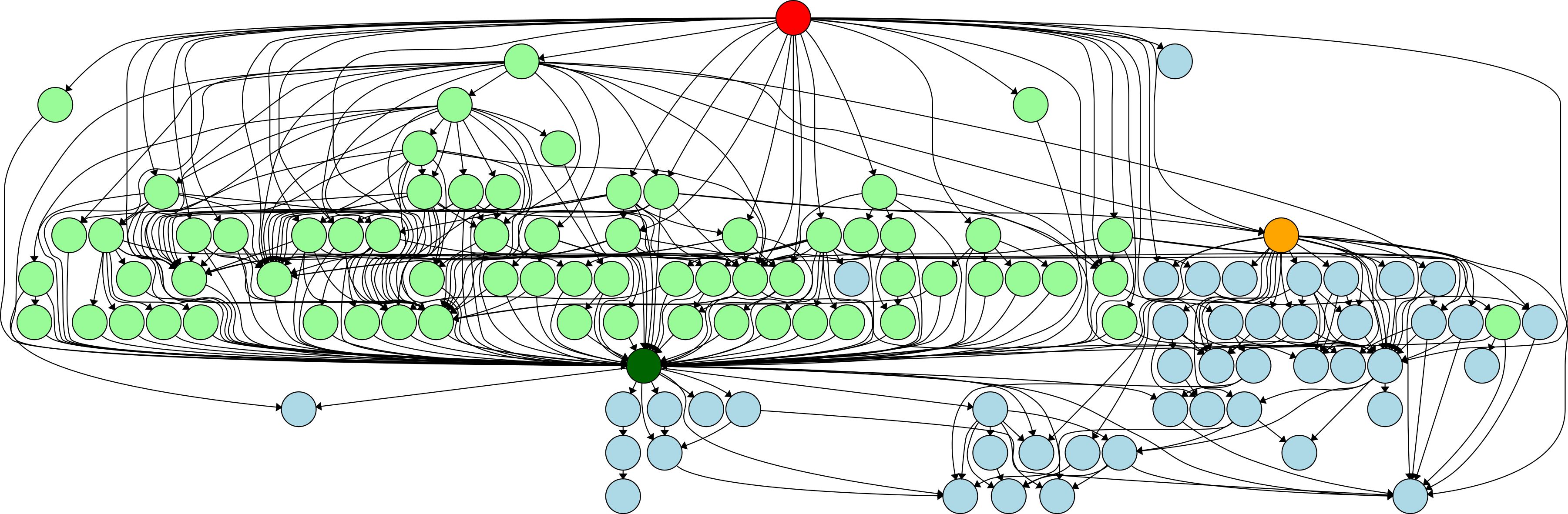

A directed graph showing the complexity of Firedrake’s dependencies. Firedrake is highlighted at the top in red, Python is shown in dark green and Python packages in light green. The orange circle is PETSc, and all remaining pale blue circles are compiled dependencies.

Generated by the command: spack graph -d py-firedrake %gcc ^mpich ^openblas > py-firedrake.dot

Spack is a package manager designed specifically for installing software on HPC systems. We have created a Spack package to install Firedrake, an automated system for the solution of partial differential equations using the finite element method and sophisticated code generation. Through this work we have developed a workflow for using Spack on ARCHER2, which can be used by any HPC user. Packages within Spack have been improved, updated and contributed back to the Spack project, making them easier to install on ARCHER2. This work will benefit all ARCHER2 users who wish to install packages not already provided on the system. In addition, Spack packages for many of Firedrake’s other dependencies have been developed or modified to allow for the easy installation of Firedrake. Furthermore, we have created Spack packages for some applications that themselves depend on Firedrake, so that users of these tools can install the software and immediately start doing science. These include (amongst others):

- Gusto - a Firedrake dynamical core toolkit for weather modelling.

- Thetis - an unstructured grid coastal ocean model.

- Icepack - a package for solving the equations of motion of glacier flow using Firedrake.

The availability of a Spack package will save a substantial amount of staff time that would otherwise be spent on bespoke Firedrake installations, and it even allows for system-level installations where Spack is centrally supported.

Docker is a popular container environment for users on their own local machines, but it is not suitable for HPC environments because it allows for privilege escalation. Singularity is an alternative to Docker designed for HPC and is installed as a module on ARCHER2, but users cannot build their own images from scratch there. We have developed a Singularity image for Firedrake, based on the Firedrake Docker images, so that end users who do not wish to modify the Firedrake source have a zero-installation route to running their Firedrake scripts. These Singularity images have been tested to make sure they are suitable for use on ARCHER2 and remain performant.

PETSc is a key Firedrake dependency and provides many of the solvers used for simulations. During the course of the eCSE we identified a parallel deadlock bug, which occurs when Python tries to clean up memory belonging to petsc4py on some ranks but not on others. This bug occurs more frequently as the number of MPI ranks increases, so it is more prevalent when running on large HPC facilities such as ARCHER2. We have designed an algorithm for the safe parallel collection of distributed Python objects, like those found in petsc4py. An implementation of this algorithm has been contributed to PETSc to prevent deadlock when PETSc is used in combination with memory-managed languages, such as Python or Julia.
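The idea behind safe parallel collection can be illustrated with a small single-process simulation. This is only a sketch under stated assumptions and not the implementation contributed to PETSc: the `Rank` class, the `collective_cleanup` function and the use of integer creation indices are hypothetical names chosen for illustration, and a plain set intersection stands in for the collective communication a real MPI code would need. Each simulated rank defers destruction of an object until every rank has released it, and all ranks then destroy the agreed objects in the same order.

```python
from typing import List, Set


class Rank:
    """One simulated MPI rank (hypothetical; no real MPI is used here)."""

    def __init__(self, rank_id: int) -> None:
        self.rank_id = rank_id
        self.live: Set[int] = set()        # objects still allocated on this rank
        self.garbage: Set[int] = set()     # objects released by the local collector
        self.destroy_log: List[int] = []   # order in which objects were destroyed

    def create(self, creation_index: int) -> None:
        # Collective objects are created on every rank with the same index.
        self.live.add(creation_index)

    def local_gc(self, creation_index: int) -> None:
        # The local garbage collector fires at an unpredictable time.
        # Destroying a collective object here could deadlock, so the
        # object is only queued for later collective destruction.
        self.garbage.add(creation_index)


def collective_cleanup(ranks: List[Rank]) -> List[int]:
    """Destroy only objects that every rank has released, in a fixed order."""
    if not ranks:
        return []
    # Stand-in for a collective reduction: intersect the garbage sets.
    agreed = set.intersection(*[r.garbage for r in ranks])
    order = sorted(agreed)  # the same order on every rank
    for r in ranks:
        for idx in order:
            r.live.remove(idx)
            r.garbage.remove(idx)
            r.destroy_log.append(idx)
    return order


ranks = [Rank(i) for i in range(3)]
for r in ranks:
    for idx in (0, 1, 2):
        r.create(idx)

# Each rank's collector releases objects at different times and in a
# different order.
ranks[0].local_gc(2)
ranks[0].local_gc(0)
ranks[1].local_gc(0)
ranks[1].local_gc(1)
ranks[2].local_gc(0)
ranks[2].local_gc(2)
ranks[2].local_gc(1)

first = collective_cleanup(ranks)   # only object 0 is released everywhere

ranks[0].local_gc(1)
ranks[1].local_gc(2)
second = collective_cleanup(ranks)  # objects 1 and 2 are now released everywhere

print(first, second, [r.destroy_log for r in ranks])
```

The point of the design is that no rank ever enters a collective destroy call unless every other rank is guaranteed to enter the same call, in the same sequence, regardless of when each rank's local collector happened to run.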

Information about the code

All three projects are open source:

Firedrake:

Spack:

PETSc and petsc4py

Singularity is already installed on ARCHER2 and is available as a module. The Firedrake Singularity image will be made available once a suitable host is found, but can presently be converted from a Docker image.

Furthermore, extensive instructions on how to install Firedrake using Spack, and on how to use the Singularity image, are available as a working document.

These will be incorporated into the Firedrake wiki once various upstream changes have been made.