Unleashing the Potential for Superior Parallel Performance of the ONETEP Linear-Scaling Density Functional Theory Package on ARCHER2

ARCHER2-eCSE03-05

PI: Prof Nicholas D M Hine (University of Warwick)

Technical staff: Dr Christopher S Brady (University of Warwick), Dr Heather Ratcliffe (University of Warwick).

Subject Area:

The ONETEP Linear-Scaling Density Functional Theory (LSDFT) package [1,2] is a fully featured ab initio electronic structure package suited to large-scale atomistic simulations of systems such as nanomaterials, crystalline interfaces, and biological materials. It has been developed over the last 15 years by a group of academics, the ONETEP Developers Group (ODG), based at leading UK universities including Warwick, Imperial, Cambridge and Southampton. It has a strong user community in both academia and industry, and is available via a free academic licence to UK academics or a low-cost licence to other academics, as well as via DS Biovia’s Materials Studio package.

Initial measurements in the early days of ARCHER2, using hybrid MPI/OpenMP parallelism, showed that although the code had scaled respectably on the previous ARCHER service, its parallel algorithms needed to be redeveloped to run efficiently on ARCHER2 and to take advantage of its large nodes, each with 128 cores.

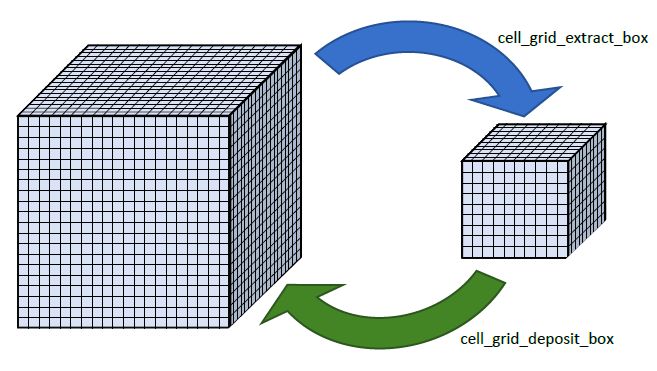

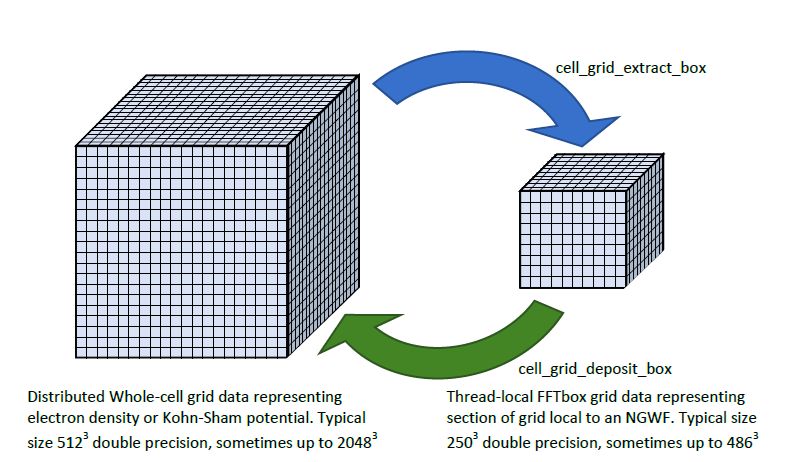

This eCSE project aimed to provide a dramatic upgrade to the parallel scaling of the ONETEP code when run on state-of-the-art parallel computing environments such as ARCHER2. Large nodes make novel demands of parallel algorithms, exposing algorithmic issues that have not previously been problematic. Work done in the past, particularly around the time of first use on the ARCHER supercomputer [3,4], resulted in parallel scaling in ONETEP that is initially reasonably good with total core count: a nearly perfect scaling regime exists for a large (1000+ atom) job on up to around 1000 cores. Beyond that, however, parallel efficiency drops rapidly. We aimed to address this via two work packages, one on whole-cell grid routines (see Figure 1) and one on sparse matrix routines. A further work package involved code sustainability and benchmarking activities, including documenting best practice when running at very large scale with hybrid MPI/OpenMP parallelism.

While all the project objectives relating to code implementation and dissemination were achieved, the parallel performance gains of the implemented approaches were smaller than hoped, because of technical limitations of the one-sided communication routines within the MPI libraries available on ARCHER2 and other systems. However, the project fed into further developments as part of the Software for Research Communities EPSRC project, which took parallel scaling development in a different direction, including work aimed at porting to GPUs. This follow-on work has made great progress on optimising the same basic operations and improving overall speed to the degree envisaged in this eCSE project, but by a different approach (see, for example, Fig. 21 of ref. [5]).
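For readers less familiar with the terminology, the parallel efficiency referred to above is conventionally defined for strong scaling (fixed problem size) as shown here. This is the standard textbook definition, not anything specific to the ONETEP benchmarks, and the symbols $T$, $N$ and $N_0$ are introduced purely for illustration.

$$
S(N) = \frac{T(N_0)}{T(N)}, \qquad E(N) = \frac{N_0\,T(N_0)}{N\,T(N)}
$$

Here $T(N)$ is the wall-clock time on $N$ cores, $N_0$ is the smallest core count used as the baseline, $S(N)$ is the speed-up relative to that baseline, and $E(N)$ is the parallel efficiency. Perfect scaling corresponds to $E(N) = 1$; a drop in efficiency at high core counts means that $E(N)$ falls progressively below 1 as $N$ grows.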

Bibliography

- [1] Introducing ONETEP: Linear-scaling density functional simulations on parallel computers, C.-K. Skylaris, P. D. Haynes, A. A. Mostofi and M. C. Payne, J. Chem. Phys. 122, 084119 (2005).

- [2] The ONETEP linear-scaling density functional theory program, J. C. A. Prentice, …, N. D. M. Hine, …, et al. (36 authors), J. Chem. Phys. 152, 174111 (2020).

- [3] Linear-scaling density-functional theory with tens of thousands of atoms: Expanding the scope and scale of calculations with ONETEP, N. D. M. Hine, P. D. Haynes, A. A. Mostofi, C.-K. Skylaris and M. C. Payne, Comput. Phys. Commun. 180, 1041 (2009).

- [4] Hybrid MPI-OpenMP parallelism in the ONETEP linear-scaling electronic structure code: Application to the delamination of cellulose nano-fibrils, K. A. Wilkinson, N. D. M. Hine and C.-K. Skylaris, J. Chem. Theory Comput. 10, 4782 (2014).

- [5] ONETEP docs

Information about the code

ONETEP is compiled as a module on ARCHER and can be used by any researcher with a licence. Licences can be obtained from Cambridge Enterprise and are free to academics worldwide.