Upscaling ExaHyPE

ARCHER2-eCSE04-02

PI: Dr Tobias Weinzierl (University of Durham)

Technical staff: Dr Holger Schulz (University of Durham); Adam Tuft (University of Durham)

Subject Area:

Exascale computers obtain their power from GPGPU accelerators and from an explosion of their core-per-node count. Because of the latter, modern HPC codes must be able to exploit many cores. The classic fork-join model struggles to achieve this, since there are always imbalances which limit strong scaling, so task-based programming is becoming increasingly attractive. Tasks, however, bring risks of their own: codes may incur NUMA penalties, increase memory movements, over-synchronise threads, and continue to suffer from fork-join imbalances if the runtime fails to use thread wait times to process pending tasks.

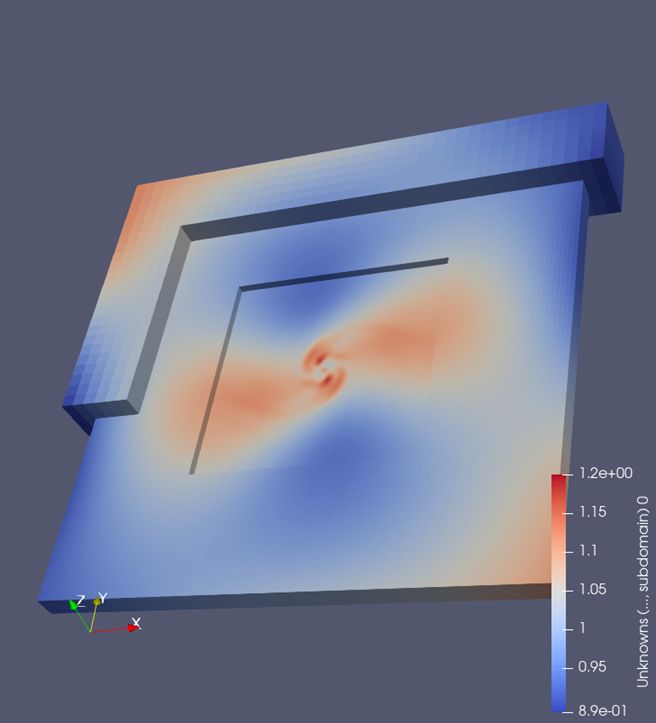

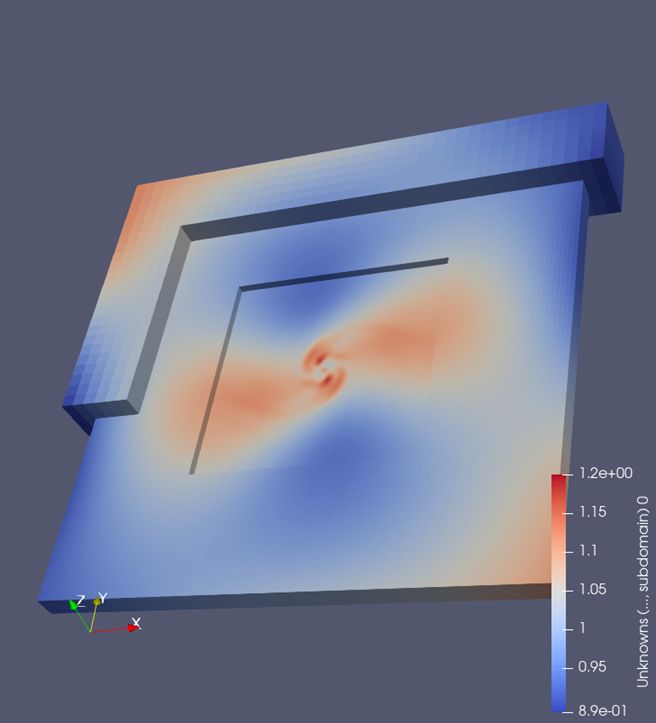

We study ExaHyPE, a code employing dynamic adaptive mesh refinement (AMR) to simulate wave phenomena such as gravitational or seismic waves. Our work proposed to introduce “manual” task queues on top of the OpenMP runtime. These queues explicitly take NUMA penalties into account and allow us to control whether a waiting thread should actively wait for its companions to terminate (to reduce the algorithmic latency) or process as many tasks as possible in the meantime (to increase the throughput). The introduction of smart pointers helps us to reduce the memory movements despite the additional task queues. We assume that these implementation patterns, rationale and idioms are important for a wide range of applications which have to deal with very dynamic, unpredictable workloads. In an era where further upscaling is constrained by power, and the electricity cost of supercomputing becomes a challenge for our society, increasing the efficiency of simulation codes becomes mandatory for supercomputer users.
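The wait-policy idea can be illustrated with a small C++ sketch. It is not Peano's or ExaHyPE's actual implementation: the names `TaskQueue`, `WaitPolicy`, `waitFor` and `demo` are hypothetical, the queue is a plain mutex-protected `std::deque`, and NUMA placement is not modelled at all. The sketch only shows how a waiting thread can either poll for completion or drain pending tasks, and how a `std::shared_ptr` lets queued tasks share a data block instead of copying it.

```cpp
#include <atomic>
#include <cassert>
#include <deque>
#include <functional>
#include <memory>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical names throughout; this is an illustration, not Peano's API.
enum class WaitPolicy {
  BusyWait,            // only poll the counter: aims at low latency
  ProcessPendingTasks  // run queued tasks while waiting: aims at throughput
};

class TaskQueue {
public:
  using Task = std::function<void()>;

  void push(Task task) {
    std::lock_guard<std::mutex> lock(_mutex);
    _tasks.push_back(std::move(task));
  }

  // Runs one pending task if available; returns false if the queue was empty.
  bool processOne() {
    Task task;
    {
      std::lock_guard<std::mutex> lock(_mutex);
      if (_tasks.empty()) return false;
      task = std::move(_tasks.front());
      _tasks.pop_front();
    }
    task();  // executed outside the lock so other threads can use the queue
    return true;
  }

private:
  std::mutex       _mutex;
  std::deque<Task> _tasks;
};

// Returns once `outstanding` has dropped to zero.
void waitFor(const std::atomic<int>& outstanding, TaskQueue& queue, WaitPolicy policy) {
  while (outstanding.load() > 0) {
    if (policy == WaitPolicy::ProcessPendingTasks && queue.processOne()) continue;
    std::this_thread::yield();  // nothing to do (or BusyWait): poll again
  }
}

// Single-threaded demonstration: the waiting thread drains the queue itself.
double demo() {
  TaskQueue        queue;
  std::atomic<int> outstanding{0};
  // The patch is shared by pointer, so enqueuing a task copies no cell data.
  auto patch = std::make_shared<std::vector<double>>(4, 1.0);

  for (int i = 0; i < 3; i++) {
    outstanding++;
    queue.push([patch, &outstanding] {
      for (double& value : *patch) value *= 2.0;
      outstanding--;
    });
  }
  waitFor(outstanding, queue, WaitPolicy::ProcessPendingTasks);
  return (*patch)[0];  // each of the three tasks doubled the entry once
}
```

Calling `demo()` from a `main` function runs three tasks on the waiting thread itself. With `WaitPolicy::BusyWait` the same call would only terminate if other threads were processing the queue, which is the latency-versus-throughput trade-off described above.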

With the proposed alterations of the tasking runtime, the team has managed to use the ExaHyPE code to run astrophysical challenges with unprecedented compute efficiency. The team has also started to discuss the identified challenges with the communities writing mainstream tasking runtimes. We therefore hope that our alterations might, in the long term, find their way into mainstream off-the-shelf software such as the OpenMP runtime.

Two merging black holes.

Such simulations require very efficient tasking since they employ multiple physical models over a dynamically adaptive mesh.

Work by Han Zhang and Baojiu Li (ICC, Durham) exploiting eCSE research.

- [1] H. Schulz, G. Brito Gadeschi, O. Rudyy, T. Weinzierl: Task Inefficiency Patterns for a Wave Equation Solver. In S. McIntosh-Smith, B. R. de Supinski, J. Klinkenberg: OpenMP: Enabling Massive Node-Level Parallelism, Springer, pp. 111-124 (2021)

- [2] B. Li, H. Schulz, T. Weinzierl, H. Zhang: Dynamic task fusion for a block-structured finite volume solver over a dynamically adaptive mesh with local time stepping. ISC High Performance 2022, LNCS 13289, pp. 153-173 (2022)

- [3] H. Zhang, T. Weinzierl, H. Schulz, B. Li: Spherical accretion of collisional gas in modified gravity I: self-similar solutions and a new cosmological hydrodynamical code. Monthly Notices of the Royal Astronomical Society, 515(2), pp. 2464-2482 (2022)

- [4] M. Wille, T. Weinzierl, G. Brito Gadeschi, M. Bader: Efficient GPU Offloading with OpenMP for a Hyperbolic Finite Volume Solver on Dynamically Adaptive Meshes. ISC High Performance 2023, LNCS (2023) – accepted

Information about the code

Peano is available from the Peano website. ExaHyPE 2 is an extension to Peano which is shipped with the core repository. All modifications made as part of the eCSE project have been merged into the main Peano branch.

The flaws behind OpenMP and the proposed extensions are described in two ISC publications (see the references above). The realisation of the workarounds can be found in Peano’s technical architecture (namespace tarch/multicore). We also offer TBB, SYCL and C++ back-ends that use the techniques discussed here, though the eCSE project focused on OpenMP.