Future-proof parallelism in CASTEP

ARCHER2-eCSE03-15

PI: Dr Phil Hasnip (University of York)

Co-I(s): Mr Matthew Smith (University of Cambridge, now University of York)

Technical staff: Ed Higgins and Ben Durham (University of York)

Subject Area:

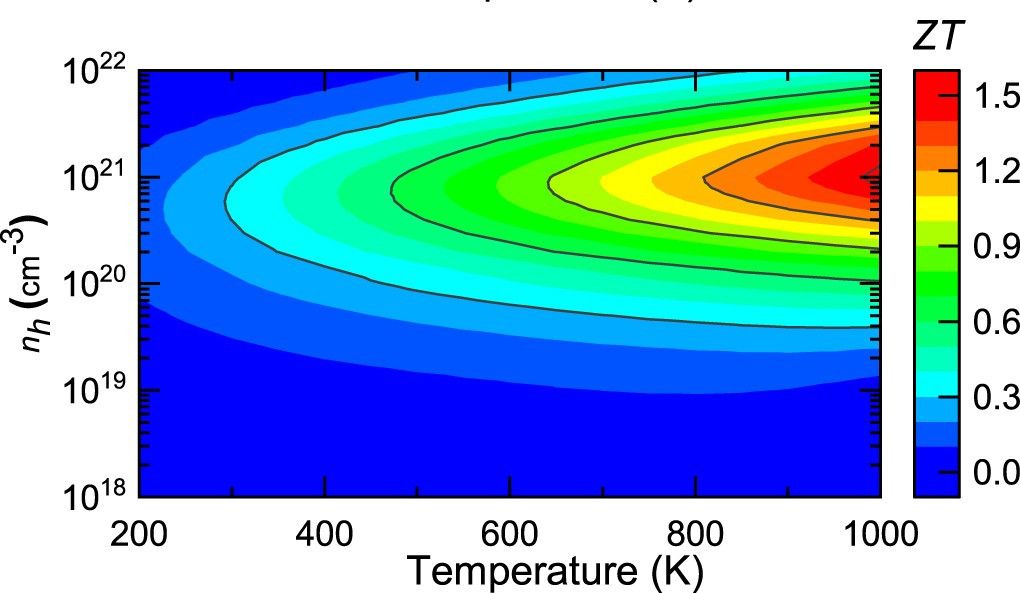

This image shows the thermoelectric figure of merit, ZT, for TaFeSb, a material that was only proposed at the time and has since been discovered. The result is published in:

G. Naydenov et al., J. Phys. Mater. 2, 035002 (2019)

doi: 10.1088/2515-7639/ab16fb

CASTEP is a UK flagship materials modelling software package, which uses Density Functional Theory (DFT) to predict and explain mechanical, physical, electronic and chemical properties of materials. It is used by thousands of academic and industrial research groups across more than 60 countries, and is heavily used on ARCHER2, where it routinely exceeds 5% of total monthly usage. The aim of this eCSE project was to substantially improve the parallel performance of CASTEP, reducing the time-to-science and extending the range of compute cores which may be used efficiently. This was to be achieved by re-engineering CASTEP’s main distributed memory parallel decomposition, and extending and optimising CASTEP’s shared memory parallelisation.

DFT is a form of quantum mechanics, which has become one of the most widely used research tools for studying the behaviour of matter at the nanoscale. In principle the properties of materials could be predicted by solving the many-electron Schrödinger equation, but solving it directly is not practical for systems of more than a few electrons, as the computer memory required for such problems scales exponentially with the number of electrons. Instead, the fundamental DFT theorems prove that all ground state properties of a system depend only on the electron density ρ(r), which does not have a problematic memory scaling with the number of electrons. The energy functional is of particular interest, as by knowing the energy and its derivatives one can predict the lowest energy (ground state) structure of a material or molecule, which is the most stable state and therefore the state most likely to be found in nature under ambient conditions. The exact energy functional is unknown, but relatively simple approximations can yield highly accurate results, and this favourable balance of computational cost and accuracy has led to approximately 30,000 papers using DFT published each year (of which CASTEP users account for roughly 10%).
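To give a feel for the memory argument, the following is a rough back-of-the-envelope sketch rather than a description of any real code. It assumes each electron coordinate is sampled on a hypothetical grid of `grid_points` points, ignores spin, and assumes 16-byte complex and 8-byte real values; all of these figures are illustrative assumptions.

```python
# Illustrative estimate only: grid_points and bytes_per_value are assumed
# numbers chosen for this example, not values taken from CASTEP.

def wavefunction_bytes(n_electrons, grid_points=1000, bytes_per_value=16):
    """Storage for a many-electron wavefunction psi(r1, ..., rn).

    One complex value is needed for every combination of the n electron
    positions, so the count grows as grid_points ** n_electrons.
    """
    return bytes_per_value * grid_points ** n_electrons


def density_bytes(grid_points=1000, bytes_per_value=8):
    """Storage for the electron density rho(r).

    One real value per grid point, independent of the number of electrons.
    """
    return bytes_per_value * grid_points


for n in (1, 2, 4, 8):
    print(f"{n} electrons: wavefunction {wavefunction_bytes(n):.3e} bytes, "
          f"density {density_bytes():.3e} bytes")
```

Under these assumptions every additional electron multiplies the wavefunction storage by the number of grid points, while the density storage stays fixed.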

The atoms in materials are typically arranged in periodically repeating structures, and CASTEP uses periodic boundary conditions when solving the Kohn-Sham equations (the crucial equations at the heart of DFT). The solutions to these equations are the Kohn-Sham wavefunctions, which in CASTEP are represented as weighted sums of periodic 3D plane-waves—the so-called 3D Fourier basis. The plane-waves must have the same periodicity as the simulated atomic structure, and this restricts the permitted plane waves to those whose wave-vectors lie on a discrete 3D grid. Thus the Kohn-Sham wavefunctions are typically represented as a set of coefficients for each point on this plane-wave (Fourier) grid. When solving the Kohn-Sham equations, some operations are very efficient in this plane-wave basis; however, other operations are much more efficient to carry out with wavefunctions represented by values on a 3D spatial grid (“real-space”). During each CASTEP simulation, Fast Fourier Transforms (FFTs) are used to efficiently transform the wavefunction from the plane-wave representation to the real-space representation, and back again.
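The two representations can be illustrated with a one-dimensional toy example. This is a minimal sketch, not CASTEP's implementation: it uses a naive discrete Fourier transform that costs O(n²) operations instead of a true FFT, works in 1D rather than 3D, and the coefficient values and the normalisation convention are assumptions made for the example.

```python
import cmath

def to_real_space(coeffs):
    """Evaluate psi(x_j) = sum_k c_k * exp(2*pi*i*k*j/n) on n grid points."""
    n = len(coeffs)
    return [sum(c * cmath.exp(2j * cmath.pi * k * j / n)
                for k, c in enumerate(coeffs))
            for j in range(n)]

def to_plane_waves(values):
    """Recover c_k = (1/n) * sum_j psi(x_j) * exp(-2*pi*i*k*j/n)."""
    n = len(values)
    return [sum(v * cmath.exp(-2j * cmath.pi * k * j / n)
                for j, v in enumerate(values)) / n
            for k in range(n)]

# Hypothetical plane-wave coefficients on an 8-point grid. The last entry
# plays the role of wave-vector -1 and is the complex conjugate of entry 1,
# which makes the real-space function real-valued.
coeffs = [0.5, 0.25 + 0.1j, 0.0, 0.0, 0.0, 0.0, 0.0, 0.25 - 0.1j]

psi = to_real_space(coeffs)        # plane-wave -> real-space
recovered = to_plane_waves(psi)    # real-space -> plane-wave

max_error = max(abs(a - b) for a, b in zip(coeffs, recovered))
print(f"largest round-trip error: {max_error:.2e}")
```

The round trip returns the original coefficients to within floating-point rounding, which is the property that allows each operation to be carried out in whichever representation is cheaper.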

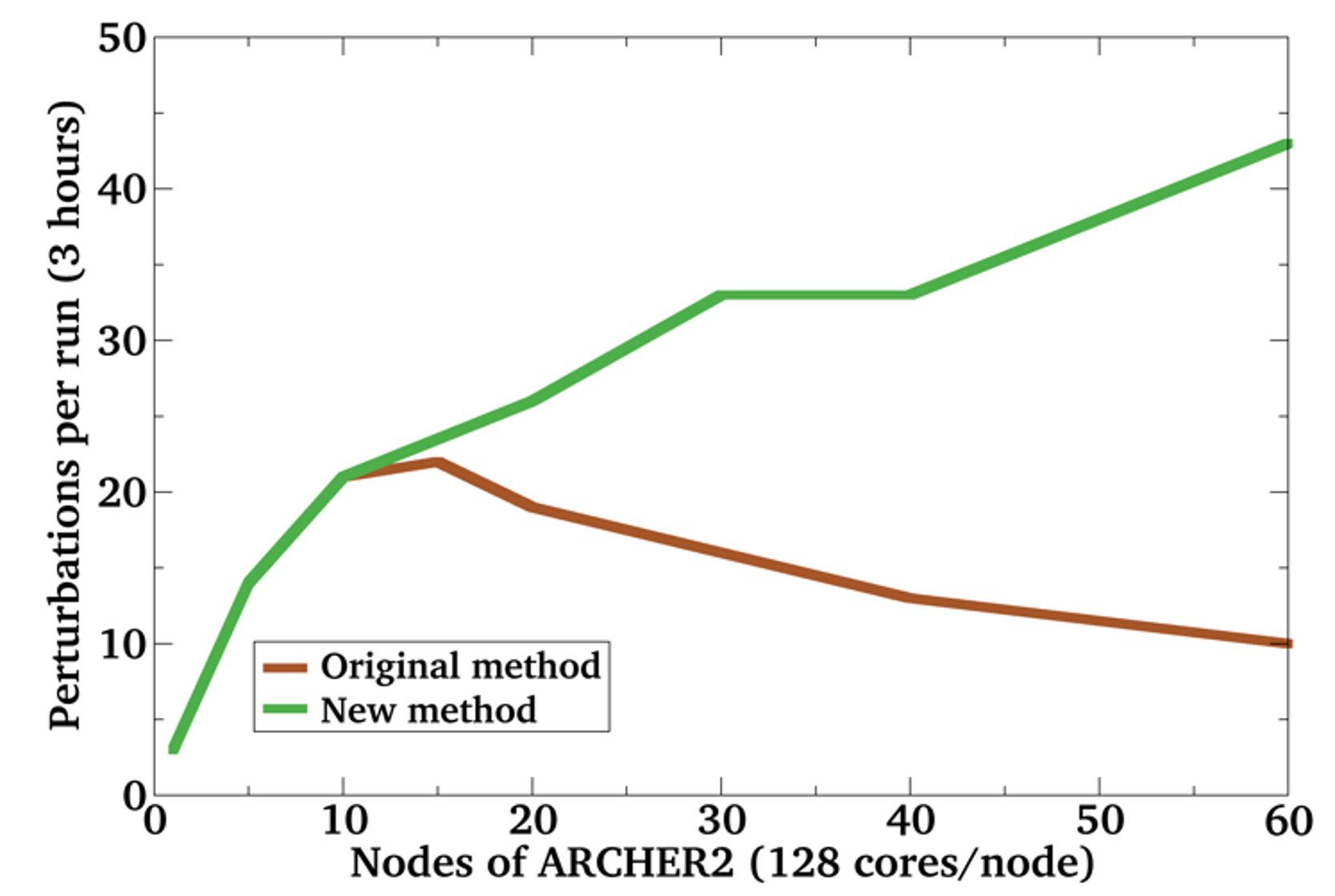

When running large simulations on a parallel computer, the most common data decomposition is to distribute the Fourier grid over MPI ranks, but the resultant parallel 3D FFT limits the strong scaling. This limitation is due to the all-to-all communications required by the parallel 3D FFT algorithm, which generate a large number of small simultaneous messages and so place a large burden on the network. For N MPI processes in the Fourier parallelism, all N processes exchange data, so the total number of messages scales as N² and the message length scales as 1/N². As N increases, the message size decreases and eventually the communication time is dominated by the network interconnect latency; furthermore, the quadratic increase in the number of messages leads to contention for shared network resources, in particular the Network Interface Controllers (NICs). This causes issues on ARCHER2, where the 128 physical cores on each node all share two NICs.
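The scaling of the all-to-all exchange can be made concrete with a small counting sketch. The function name and the 128 MiB grid size are hypothetical, and the model assumes the grid data is split evenly between ranks and that a rank does not send a message to itself.

```python
def all_to_all_messages(n_ranks, grid_bytes):
    """Return (message_count, bytes_per_message) for one all-to-all exchange.

    Each rank holds grid_bytes / n_ranks of the data and cuts it into one
    piece per rank, so every piece is grid_bytes / n_ranks**2 in size.
    Pieces that a rank keeps for itself are not counted as messages.
    """
    message_count = n_ranks * (n_ranks - 1)
    bytes_per_message = grid_bytes / n_ranks ** 2
    return message_count, bytes_per_message


grid_bytes = 128 * 1024 ** 2  # assumed total grid size, for illustration only

for n_ranks in (16, 128, 1024):
    count, size = all_to_all_messages(n_ranks, grid_bytes)
    print(f"N = {n_ranks:5d}: {count:8d} messages of {size:10.1f} bytes each")
```

In this model, doubling the number of ranks roughly quadruples the message count and cuts each message to a quarter of its size.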

In this work, CASTEP’s parallel efficiency was improved by redesigning the way the 3D Fourier grid is distributed over the MPI ranks to substantially reduce the number of messages which are sent during the parallel FFT. The fundamental FFT operation is the same, which means that the same total data is communicated, so the reduction in the number of messages means that each message is larger than before. When the number of MPI ranks, N, is small, this change leaves the communications largely unchanged, but as N increases the longer messages mean that CASTEP stays in the efficient bandwidth-limited regime for longer, and the reduction in the number of messages reduces the network contention. The new parallel method leads to exactly the same CASTEP results, but in significantly less time on large parallel machines such as ARCHER2, and it also allows CASTEP to use far larger numbers of cores effectively. Recent benchmark calculations to compute a thermoelectric material’s vibrational properties (“phonons”) show that more than six times as many cores may be used efficiently.
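The benefit of sending the same data in fewer, larger messages can be seen in a simple latency-bandwidth cost model. This sketch does not model the new CASTEP decomposition itself, which is not detailed here; the latency and bandwidth values, the message counts and the assumption that one rank sends its messages one after another are all illustrative.

```python
def transfer_time(n_messages, total_bytes, latency=2e-6, bandwidth=10e9):
    """Estimated time in seconds for one rank to send total_bytes as n_messages.

    Every message pays a fixed latency cost, and the data itself moves at
    the given bandwidth in bytes per second. Contention is ignored.
    """
    return n_messages * latency + total_bytes / bandwidth


total_bytes = 1024 ** 2  # assumed 1 MiB sent by one rank, same in both cases

many_small = transfer_time(n_messages=4096, total_bytes=total_bytes)
fewer_large = transfer_time(n_messages=64, total_bytes=total_bytes)

print(f"4096 messages: {many_small * 1e6:8.1f} microseconds")
print(f"  64 messages: {fewer_large * 1e6:8.1f} microseconds")
```

With these assumed numbers the bandwidth term is identical in both cases, so the whole difference comes from the per-message latency, which is the regime the paragraph above describes for large N.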

The plane-wave parallelism may be combined with shared-memory parallelism (MPI+OpenMP) to reduce memory overhead and improve parallel scaling further. This project optimised several of CASTEP’s shared-memory parallel operations, improving the OpenMP scaling of one key matrix-matrix multiplication operation by a factor of four.

The new code has been included in the 2024 release of CASTEP, making it available to users worldwide, and optimised further in the 2025 release. Key beneficiaries of this project include the CCP-NC, CCP9 and UKCP HEC communities, who carry out large scale simulations on ARCHER2 as well as UK Tier-2 HPC facilities such as Young, run by the MMM Hub. However, the impact and benefits of this work will extend far beyond the UK HPC community. CASTEP is used by many industrial research sites as well as academic research groups worldwide. In the last 5 years alone, CASTEP has been licensed to over 500 industrial sites, including many household names (e.g. Honda, Panasonic, AstraZeneca and Toshiba), and supported over 30 patent applications. The work in this project will thus benefit all parallel CASTEP simulations, in both academic and industrial research settings.

Information about the code

CASTEP is a supported application on ARCHER2, and available via the module system to any licensed users. CASTEP is distributed under a cost-free licence to any academic groups worldwide.