- Current System Load - CPU, GPU

- Service Alerts

- Maintenance Sessions

- Previous Service Alerts

- System Status Mailings

- FAQ

- Usage statistics

Current System Load - CPU

The plot below shows the status of nodes on the current ARCHER2 Full System service. A description of each of the status types is provided below the plot.

- alloc: Nodes running user jobs

- idle: Nodes available for user jobs

- resv: Nodes in reservation and not available for standard user jobs

- plnd: Nodes planned for use by a future job. If pending jobs can fit in the space before the future job is due to start, they can run on these nodes (often referred to as backfilling).

- down, drain, maint, drng, comp, boot: Nodes unavailable for user jobs

- mix: Nodes in multiple states
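The backfilling rule described for `plnd` nodes comes down to a time check: a pending job may use planned nodes only if it would finish before the future job is due to start. The following is a minimal sketch of that check, not the scheduler's actual implementation; the function name `can_backfill` and its parameters are hypothetical.

```python
from datetime import datetime, timedelta


def can_backfill(now: datetime, planned_start: datetime, job_walltime: timedelta) -> bool:
    """Hypothetical helper: True if a job started now would end no later
    than the start time of the job the nodes are planned for."""
    return now + job_walltime <= planned_start


# Illustrative values only: the planned job starts two hours from "now".
now = datetime(2026, 1, 1, 12, 0)
planned_start = now + timedelta(hours=2)

print(can_backfill(now, planned_start, timedelta(hours=1)))  # True: fits in the gap
print(can_backfill(now, planned_start, timedelta(hours=3)))  # False: would overrun
```

A real scheduler also takes priorities, node counts and partition limits into account; the sketch covers only the timing condition stated in the list above.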

Note: the long-running reservation visible in the plot corresponds to the short QoS, which is used to support small, short jobs with fast turnaround time.

Current System Load - GPU

- alloc: Nodes running user jobs

- idle: Nodes available for user jobs

- resv: Nodes in reservation and not available for standard user jobs

- plnd: Nodes planned for use by a future job. If pending jobs can fit in the space before the future job is due to start, they can run on these nodes (often referred to as backfilling).

- down, drain, maint, drng, comp, boot: Nodes unavailable for user jobs

- mix: Nodes in multiple states

Service Alerts

The ARCHER2 documentation also covers some Known Issues which users may encounter when using the system.

| Status | Type | Start | End | Scope | User Impact | Reason |
|---|---|---|---|---|---|---|
| Planned | Service Alert | 2026-03-10 00:00 | 2026-03-10 00:00 | ARCHER2 Service Planned Maintenance outage | The ARCHER2 Service will be down from 08:00 to midnight to allow the certificates to be updated | Certificate update |
| Planned | Service Alert | 2025-10-16 00:00 | | License server availability | There will be an interruption to license server availability of up to two hours - time TBC | License server work |

Maintenance Sessions

This section lists recent and upcoming maintenance sessions. A full list of past maintenance sessions is available.

No scheduled or recent maintenance sessions

Previous Service Alerts

This section lists the five most recent resolved service alerts from the past 30 days. A full list of historical resolved service alerts is available.

| Status | Type | Start | End | Scope | User Impact | Reason |
|---|---|---|---|---|---|---|
| Resolved | Service Alert | 2026-02-18 08:30 | 2026-02-18 11:30 | ARCHER2 scheduling | Low probability risk of issues with scheduling or running jobs | High priority security update |
| Resolved | Service Alert | 2026-02-10 09:00 | 2026-02-10 10:37 | ARCHER2 service power | Very low risk of loss of power to login nodes, management nodes, file systems | Test of on-site generator |
| Resolved | Issue | 2026-02-10 16:00 | 2026-02-11 12:55 | Compute nodes | All work is now running as normal once more. | New work was prevented from starting due to a cooling issue. Work was restarted once the initial issue was fixed. Further work on the cooling system will be completed on Wednesday morning, 11th February. |
| Resolved | Service Alert | 2026-02-02 13:44 | 2026-02-02 14:31 | Compute nodes | New and pending jobs will remain queued and not run on ARCHER2 until the issue is resolved. | There's an ongoing issue with the cooling pump, which is being actively investigated. |
| Resolved | Service Alert | 2026-01-21 18:30 | 2026-02-05 16:30 | ARCHER2 cooling | Low probability risk of requirement to throttle compute for ARCHER2 to reduce cooling load | Maintenance being carried out on one of 3 pumps that cool ARCHER2 and Cirrus. The other 2 pumps will provide cooling unless there is a coincidental failure of one of the 2 remaining pumps. |

System Status Mailings

If you would like to receive email notifications about system issues and outages, please subscribe to the System Status Notifications mailing list via SAFE.

FAQ

Usage statistics

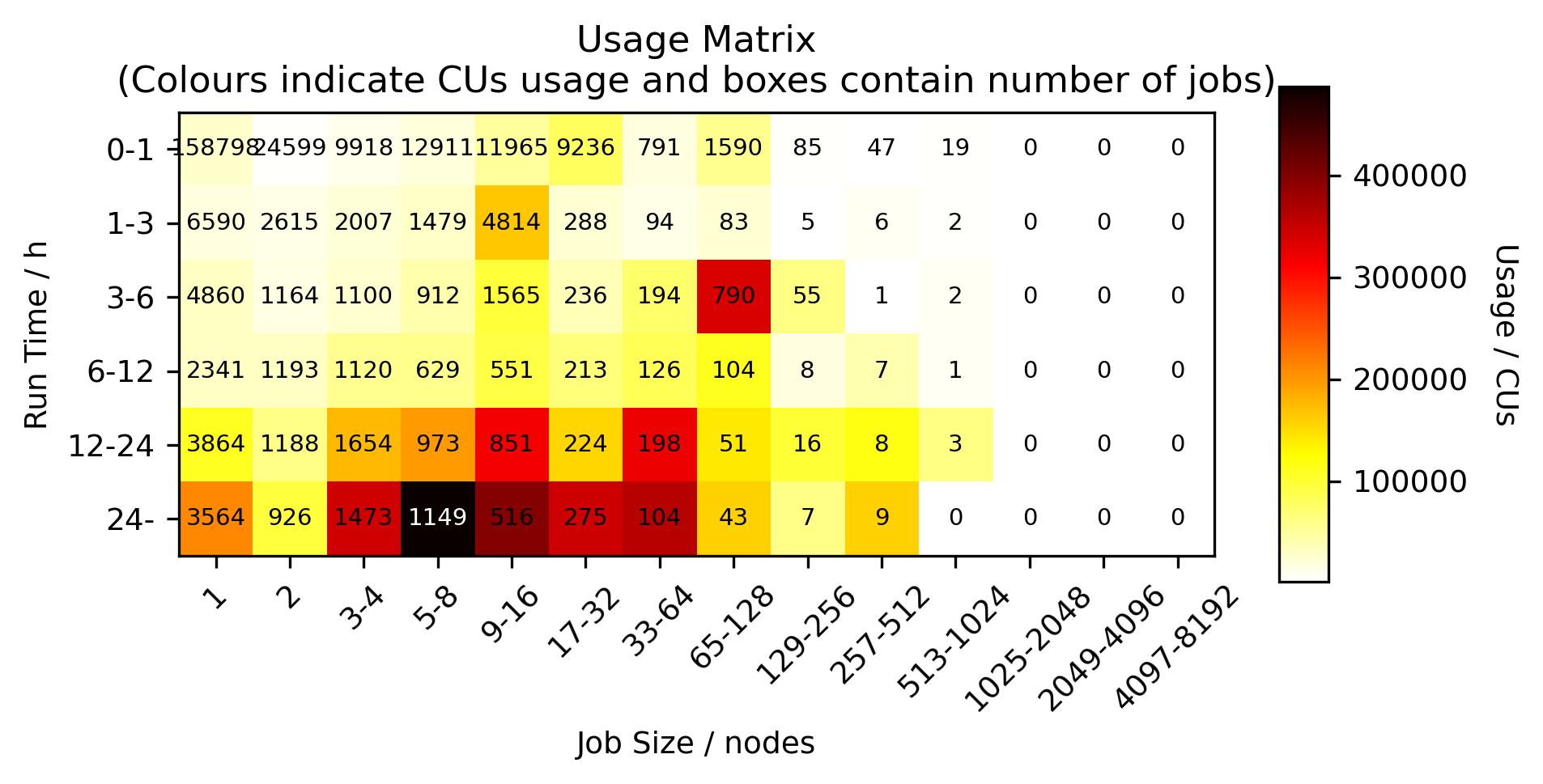

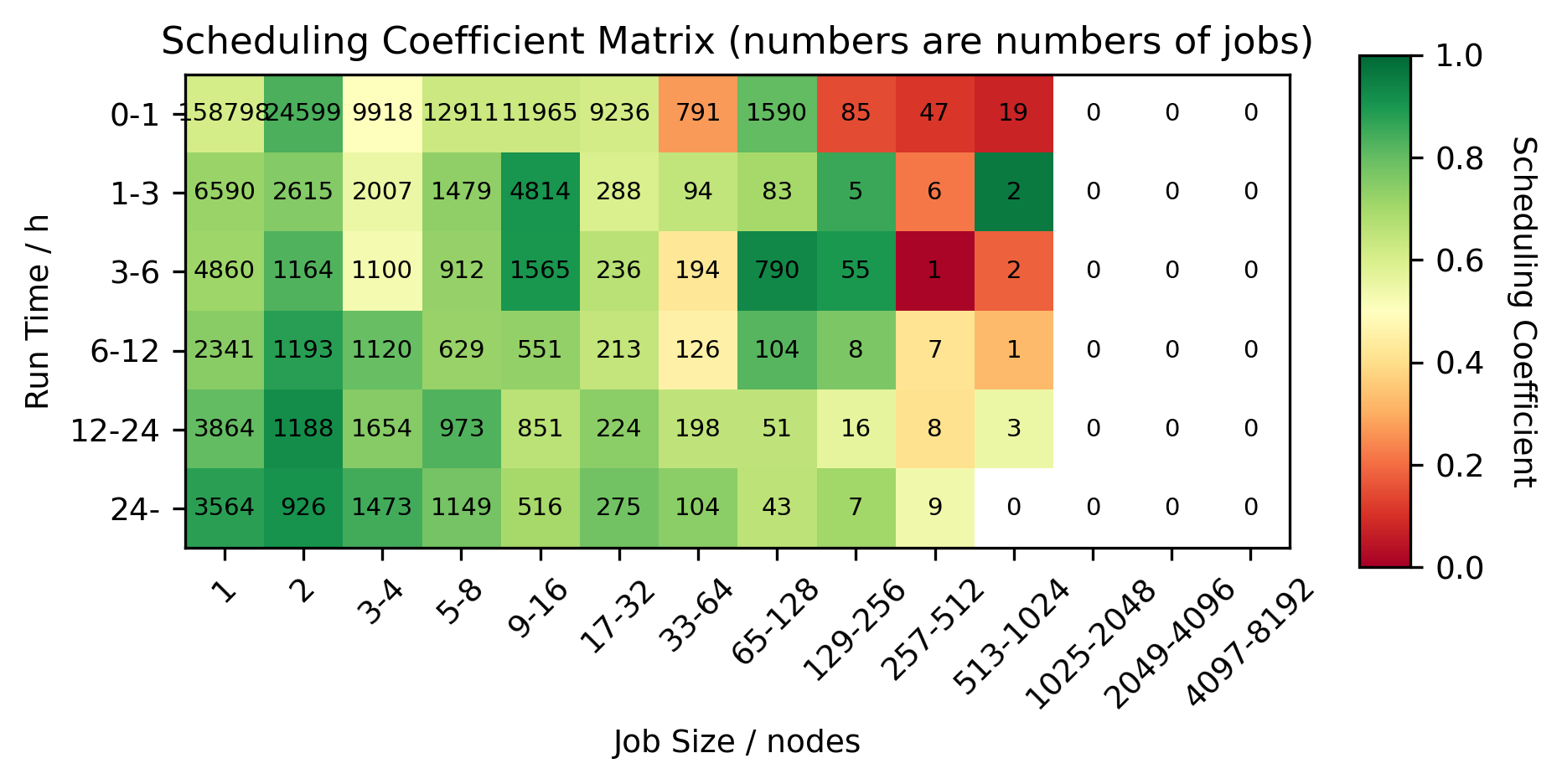

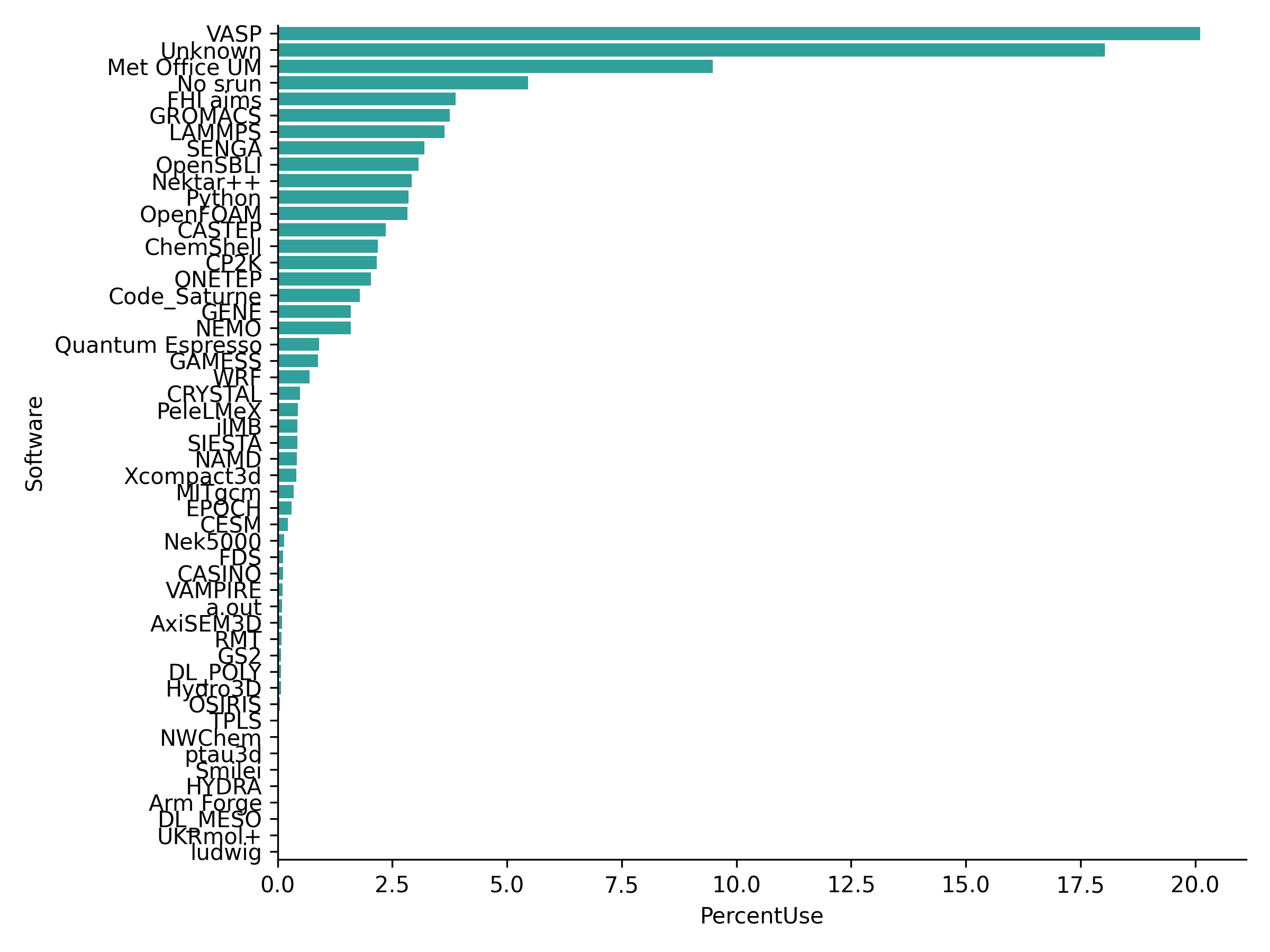

This section contains data on ARCHER2 usage for Jan 2026. Access to historical usage data is available at the end of the section.

Usage by job size and length

Queue length data

The colour indicates the scheduling coefficient, which is computed as [run time] divided by [run time + queue time]. A scheduling coefficient of 1 indicates that there was zero time queuing; a scheduling coefficient of 0.5 means that the job spent as long queuing as it did running.
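As a worked illustration of the scheduling coefficient, the short sketch below applies the formula to a few example jobs. The function name `scheduling_coefficient` and the sample times are assumptions made for illustration; only the formula itself comes from the text above.

```python
def scheduling_coefficient(run_time: float, queue_time: float) -> float:
    """Run time divided by (run time + queue time); both in the same unit."""
    total = run_time + queue_time
    if total <= 0:
        raise ValueError("run_time + queue_time must be positive")
    return run_time / total


# Times in seconds (illustrative values only).
print(scheduling_coefficient(3600, 0))      # 1.0  -> no time spent queuing
print(scheduling_coefficient(3600, 3600))   # 0.5  -> queued as long as it ran
print(scheduling_coefficient(3600, 10800))  # 0.25 -> queued three times as long as it ran
```

Values closer to 1 therefore mean that less of the job's total turnaround time was spent waiting in the queue.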

Software usage data

Plot and table of % use and job step size statistics for different software on ARCHER2 for Jan 2026. This data is also available as a CSV file.

This table shows job step size statistics in cores weighted by usage, total number of job steps and percent usage broken down by different software for Jan 2026.

| Software | Min | Q1 | Median | Q3 | Max | Jobs | Nodeh | PercentUse | Users | Projects |
|---|---|---|---|---|---|---|---|---|---|---|
| Overall | 0 | 512.0 | 1024.0 | 6165.0 | 131072 | 2612235 | 4408610.9 | 100.0 | 978 | 125 |
| VASP | 1 | 512.0 | 512.0 | 1792.0 | 23040 | 106683 | 886382.6 | 20.1 | 139 | 14 |
| Unknown | 1 | 512.0 | 1920.0 | 6400.0 | 99104 | 733058 | 794869.1 | 18.0 | 512 | 94 |
| Met Office UM | 26 | 576.0 | 1296.0 | 6165.0 | 7320 | 25300 | 418021.1 | 9.5 | 43 | 2 |
| No srun | 0 | 384.0 | 2048.0 | 10240.0 | 131072 | 113050 | 240887.4 | 5.5 | 708 | 98 |
| FHI aims | 1 | 512.0 | 1024.0 | 1024.0 | 8192 | 88893 | 171081.4 | 3.9 | 33 | 6 |
| GROMACS | 1 | 512.0 | 1024.0 | 1024.0 | 2560 | 27422 | 165316.9 | 3.7 | 40 | 7 |
| LAMMPS | 1 | 128.0 | 320.0 | 768.0 | 131072 | 38045 | 160531.9 | 3.6 | 63 | 19 |
| SENGA | 100 | 8192.0 | 8192.0 | 8192.0 | 8192 | 84 | 141116.4 | 3.2 | 4 | 3 |
| OpenSBLI | 128 | 64000.0 | 64000.0 | 64000.0 | 131072 | 20 | 135268.7 | 3.1 | 4 | 3 |
| Nektar++ | 1 | 5120.0 | 8192.0 | 10240.0 | 16384 | 258 | 128893.0 | 2.9 | 8 | 2 |
| Python | 1 | 1.0 | 1024.0 | 9216.0 | 16384 | 1093932 | 125625.4 | 2.8 | 62 | 25 |
| OpenFOAM | 1 | 512.0 | 1024.0 | 3072.0 | 25600 | 4379 | 124907.9 | 2.8 | 54 | 19 |
| CASTEP | 1 | 512.0 | 576.0 | 960.0 | 1152 | 31042 | 103648.1 | 2.4 | 33 | 4 |
| ChemShell | 1 | 1024.0 | 1024.0 | 12800.0 | 12800 | 3272 | 96331.1 | 2.2 | 19 | 4 |
| CP2K | 1 | 484.0 | 768.0 | 1024.0 | 32768 | 22721 | 95478.2 | 2.2 | 37 | 10 |
| ONETEP | 1 | 256.0 | 256.0 | 320.0 | 2048 | 2641 | 89510.0 | 2.0 | 8 | 2 |
| Code_Saturne | 1 | 8192.0 | 8192.0 | 8192.0 | 32768 | 261 | 79202.5 | 1.8 | 8 | 3 |
| GENE | 1 | 8192.0 | 8192.0 | 10240.0 | 20480 | 4319 | 70252.8 | 1.6 | 8 | 3 |
| NEMO | 1 | 480.0 | 1188.0 | 4060.0 | 5504 | 121732 | 70200.8 | 1.6 | 26 | 6 |
| Quantum Espresso | 4 | 384.0 | 512.0 | 1024.0 | 3840 | 5223 | 39607.6 | 0.9 | 43 | 12 |
| GAMESS | 128 | 128.0 | 128.0 | 128.0 | 128 | 543 | 38694.5 | 0.9 | 9 | 1 |
| WRF | 16 | 384.0 | 384.0 | 384.0 | 384 | 180 | 30595.0 | 0.7 | 4 | 1 |
| CRYSTAL | 64 | 128.0 | 512.0 | 1024.0 | 1536 | 1368 | 21395.7 | 0.5 | 5 | 3 |
| PeleLMeX | 128 | 2048.0 | 3072.0 | 4096.0 | 8192 | 145 | 19627.8 | 0.4 | 4 | 1 |
| iIMB | 2304 | 2304.0 | 2304.0 | 2304.0 | 2304 | 141 | 19101.0 | 0.4 | 1 | 1 |
| SIESTA | 9 | 2304.0 | 2304.0 | 2304.0 | 2304 | 301 | 18823.8 | 0.4 | 2 | 2 |
| NAMD | 72 | 512.0 | 512.0 | 512.0 | 1024 | 9035 | 18354.5 | 0.4 | 4 | 1 |
| Xcompact3d | 1 | 16384.0 | 16384.0 | 16384.0 | 16384 | 63 | 18071.6 | 0.4 | 7 | 4 |
| MITgcm | 4 | 112.0 | 240.0 | 384.0 | 1000 | 14478 | 15305.4 | 0.3 | 11 | 3 |
| EPOCH | 128 | 1280.0 | 1920.0 | 32768.0 | 32768 | 688 | 13225.9 | 0.3 | 8 | 1 |
| CESM | 1 | 512.0 | 1536.0 | 1920.0 | 5760 | 431 | 9751.4 | 0.2 | 10 | 2 |
| Nek5000 | 1024 | 1024.0 | 1024.0 | 2048.0 | 2048 | 50 | 6233.5 | 0.1 | 2 | 1 |
| FDS | 248 | 248.0 | 440.0 | 440.0 | 440 | 78 | 5355.1 | 0.1 | 1 | 1 |
| CASINO | 128 | 1024.0 | 1024.0 | 2048.0 | 2048 | 339 | 5207.9 | 0.1 | 1 | 1 |
| VAMPIRE | 128 | 1024.0 | 1024.0 | 1024.0 | 1024 | 751 | 4725.1 | 0.1 | 4 | 2 |
| a.out | 1 | 256.0 | 1152.0 | 2048.0 | 4096 | 1078 | 4081.9 | 0.1 | 13 | 9 |
| AxiSEM3D | 16 | 1280.0 | 2560.0 | 2560.0 | 5120 | 620 | 4020.5 | 0.1 | 2 | 1 |
| RMT | 1280 | 1792.0 | 1792.0 | 2560.0 | 7680 | 107 | 3842.2 | 0.1 | 2 | 1 |
| GS2 | 512 | 2064.0 | 2064.0 | 2160.0 | 2160 | 96 | 3150.1 | 0.1 | 4 | 2 |
| DL_POLY | 2 | 1024.0 | 1024.0 | 1024.0 | 1280 | 1645 | 3149.9 | 0.1 | 3 | 2 |
| Hydro3D | 120 | 700.0 | 2000.0 | 2000.0 | 2000 | 25 | 2968.6 | 0.1 | 2 | 2 |
| OSIRIS | 1024 | 1024.0 | 1024.0 | 2048.0 | 2048 | 4 | 2232.3 | 0.1 | 1 | 1 |
| TPLS | 128 | 2048.0 | 4096.0 | 4096.0 | 4096 | 154 | 1381.5 | 0.0 | 2 | 1 |
| NWChem | 16 | 128.0 | 128.0 | 128.0 | 256 | 157131 | 1309.1 | 0.0 | 10 | 5 |
| ptau3d | 4 | 160.0 | 160.0 | 160.0 | 160 | 99 | 456.1 | 0.0 | 3 | 2 |
| Smilei | 8 | 128.0 | 128.0 | 128.0 | 256 | 41 | 235.5 | 0.0 | 3 | 1 |
| HYDRA | 1 | 30.0 | 30.0 | 30.0 | 1280 | 182 | 92.2 | 0.0 | 9 | 5 |
| Arm Forge | 1 | 256.0 | 256.0 | 256.0 | 1280 | 61 | 64.6 | 0.0 | 12 | 8 |
| DL_MESO | 32 | 32.0 | 32.0 | 64.0 | 64 | 24 | 23.6 | 0.0 | 1 | 1 |
| UKRmol+ | 1 | 1.0 | 2.0 | 2.0 | 4 | 16 | 1.3 | 0.0 | 1 | 1 |
| ludwig | 2 | 8.0 | 8.0 | 8.0 | 8 | 26 | 0.4 | 0.0 | 3 | 3 |
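The table's size statistics are described as weighted by usage, which is why a few large job steps can dominate the median. The exact method used to produce the table is not stated here, so the following is only a sketch of one common way to compute a usage-weighted quantile. It assumes each job step's size in cores is weighted by its node hours (taking the `Nodeh` column to mean node hours); the function `weighted_quantile` and the sample data are hypothetical.

```python
def weighted_quantile(sizes, weights, q):
    """Smallest size at which the cumulative weight reaches fraction q of the total."""
    pairs = sorted(zip(sizes, weights))
    total = sum(w for _, w in pairs)
    if total <= 0:
        raise ValueError("total weight must be positive")
    threshold = q * total
    cumulative = 0.0
    for size, weight in pairs:
        cumulative += weight
        if cumulative >= threshold:
            return size
    return pairs[-1][0]


# Hypothetical job steps: size in cores and usage in node hours.
sizes = [128, 512, 1024, 8192]
node_hours = [1.0, 2.0, 3.0, 10.0]

# The single 8192-core step accounts for most of the usage, so it sets
# the weighted median even though three of the four steps are smaller.
print(weighted_quantile(sizes, node_hours, 0.5))  # 8192
```

Under this weighting, a median of 512 cores for a code means that half of its usage, not half of its job steps, came from steps of 512 cores or fewer.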