Developing in-situ analysis capabilities for pre-Exascale simulations with Xcompact3D

ARCHER2-eCSE03-02

PI: Dr Sylvain Laizet (Imperial College London)

Co-I(s): Dr Charles Moulinec (STFC), Dr Michele Weiland (EPCC, University of Edinburgh)

Technical staff: Dr Paul Bartholomew (EPCC, University of Edinburgh)

Subject Area:

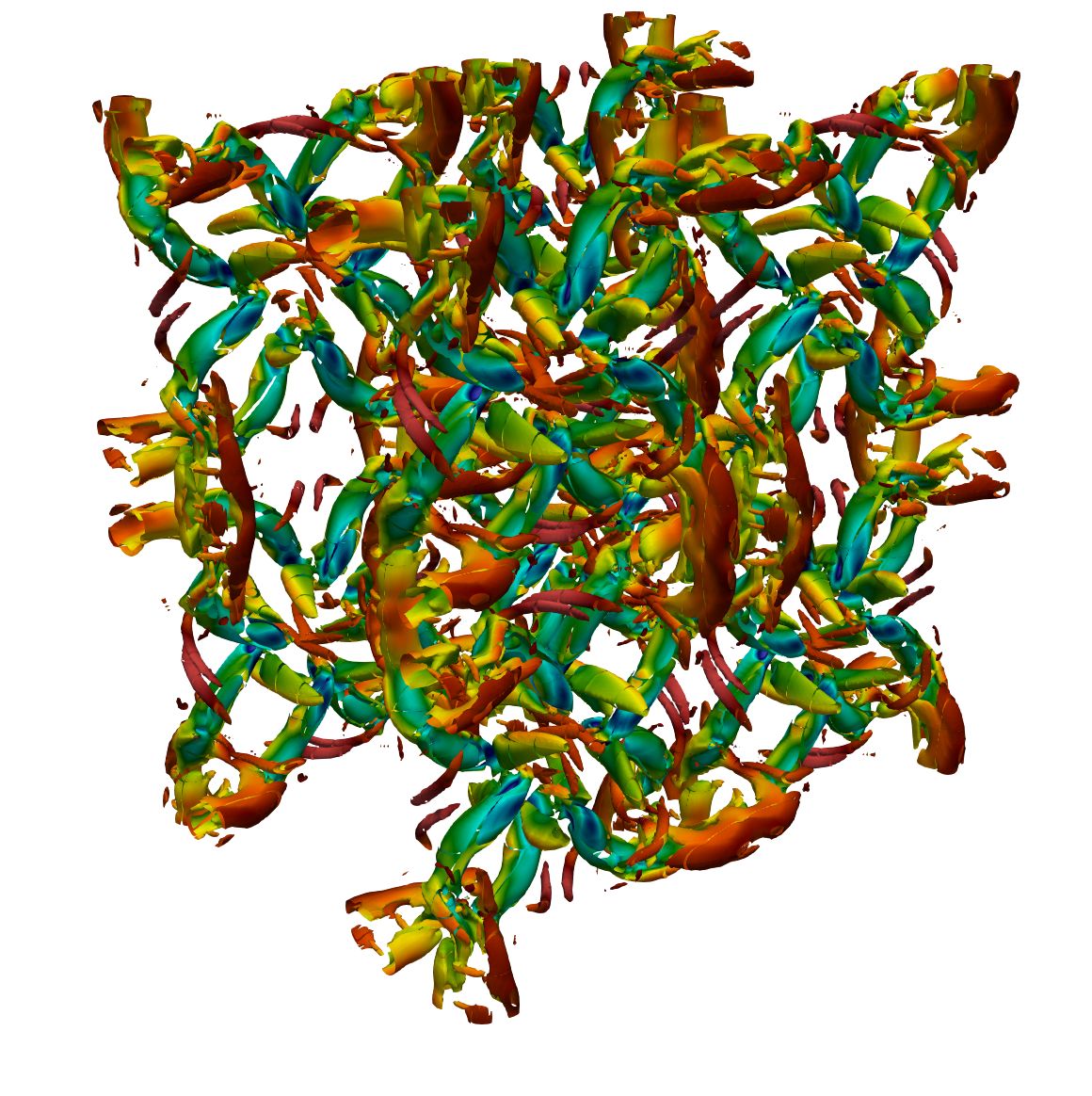

Visualising the Q-criterion of the Taylor-Green Vortex, coloured by pressure.

Xcompact3D is a high-order CFD code designed for simulating turbulent flows on supercomputers, with applications ranging from fundamental turbulence studies to simulating and optimising wind farm designs. It is parallelised using the 2DECOMP&FFT library, which includes parallel I/O facilities via MPI-IO. Solutions can be visualised with common tools such as ParaView, or analysed with Py4Incompact3D, a custom analysis framework based on the same numerical methods used by Xcompact3D.

As computational power has increased, the rate at which data can be stored has not kept pace with the rate at which it can be generated, leading to the so-called “I/O bottleneck”. To address this in Xcompact3D, this project has implemented a new I/O system using ADIOS2. The ADIOS2 interface gives access to standardised parallel file formats, including HDF5 and ADIOS2's own BP4 and BP5 formats, which will make it easier to work with data from Xcompact3D in different tools. It also enables I/O options to be configured at runtime, allowing users to rapidly test and identify the configurations that work best on their setup.
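As an illustration of what runtime configuration can look like, ADIOS2 can read its engine choice and engine parameters from an XML file, so switching between file output and streaming need not involve recompiling. The sketch below generates such a file with the Python standard library. The IO name `solution-io` is a hypothetical placeholder (the name must match whatever the application declares, which is not stated here), and the `NumAggregators` value is only an example setting to experiment with.

```python
import xml.etree.ElementTree as ET


def make_adios2_config(io_name, engine, parameters=None):
    """Build an ADIOS2 XML runtime configuration and return it as a string.

    io_name    -- name of the IO object declared by the application
                  (hypothetical here; check the application's own name)
    engine     -- ADIOS2 engine type, e.g. "BP4", "BP5", "HDF5" or "SST"
    parameters -- optional dict of engine parameters
    """
    root = ET.Element("adios-config")
    io = ET.SubElement(root, "io", name=io_name)
    eng = ET.SubElement(io, "engine", type=engine)
    for key, value in (parameters or {}).items():
        ET.SubElement(eng, "parameter", key=key, value=str(value))
    if hasattr(ET, "indent"):  # pretty-printing is available from Python 3.9
        ET.indent(root)
    return ET.tostring(root, encoding="unicode")


# Write to disk using the BP4 file format with an example aggregator count.
print(make_adios2_config("solution-io", "BP4", {"NumAggregators": 4}))

# Same IO object, but streaming to another program instead of writing files.
print(make_adios2_config("solution-io", "SST"))
```

Only the `type` attribute of the `engine` element differs between the two configurations, which is what makes it quick to compare I/O setups on a given machine.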

A further benefit of using ADIOS2 for I/O is that, by abstracting the I/O step, it can send the data stream directly to another ADIOS2 program rather than writing to disk. In this project ADIOS2 was also added to the Py4Incompact3D postprocessing library, allowing it to

- process data from the new formats that can now be written by Xcompact3D,

- receive data directly from a running Xcompact3D simulation without reading from disk.

The latter point is attractive for large-scale HPC use as it avoids the “I/O bottleneck”. As Py4Incompact3D was originally written with workstation use in mind, it first had to be parallelised to keep up with the stream of data fed to it by an Xcompact3D simulation that may be running on O(10^5) or more cores. This was achieved by wrapping the 2DECOMP&FFT library, on which Xcompact3D is built, to provide the same parallelism approach in Py4Incompact3D. Parallelising Py4Incompact3D also makes it possible to analyse datasets that were previously impractical, either because they exceeded memory limits or simply because of the time needed to process large amounts of data with a serial program.
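The parallelism approach provided by 2DECOMP&FFT is a two-dimensional "pencil" decomposition: each process owns the full extent of the grid in one direction and a slice of the other two. The following is a minimal pure-Python sketch of that idea only, not the wrapped library interface. The function names, the row-major mapping of ranks to the process grid, and the way leftover points are distributed are all assumptions made for illustration.

```python
def split_1d(n, parts):
    """Split n grid points into `parts` contiguous (start, stop) ranges.

    Assumption: leftover points go to the first ranges; the real library
    may distribute the remainder differently.
    """
    base, extra = divmod(n, parts)
    ranges, start = [], 0
    for p in range(parts):
        size = base + (1 if p < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


def x_pencil(rank, nx, ny, nz, p_row, p_col):
    """Return the (x, y, z) index ranges owned by `rank` in an x-pencil.

    x is kept whole, y is split over p_row processes and z over p_col.
    Assumption: ranks map to the p_row x p_col process grid in row-major order.
    """
    row, col = divmod(rank, p_col)
    return (0, nx), split_1d(ny, p_row)[row], split_1d(nz, p_col)[col]


# Example: a 64 x 65 x 66 grid on a 2 x 3 process grid (6 ranks).
nx, ny, nz, p_row, p_col = 64, 65, 66, 2, 3
for rank in range(p_row * p_col):
    print(rank, x_pencil(rank, nx, ny, nz, p_row, p_col))
```

Because every rank holds complete lines of data along x, operations that couple all points along a line (such as the compact finite-difference schemes used by Xcompact3D) can be applied in that direction without communication; the data are transposed to y- or z-pencils to treat the other directions.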

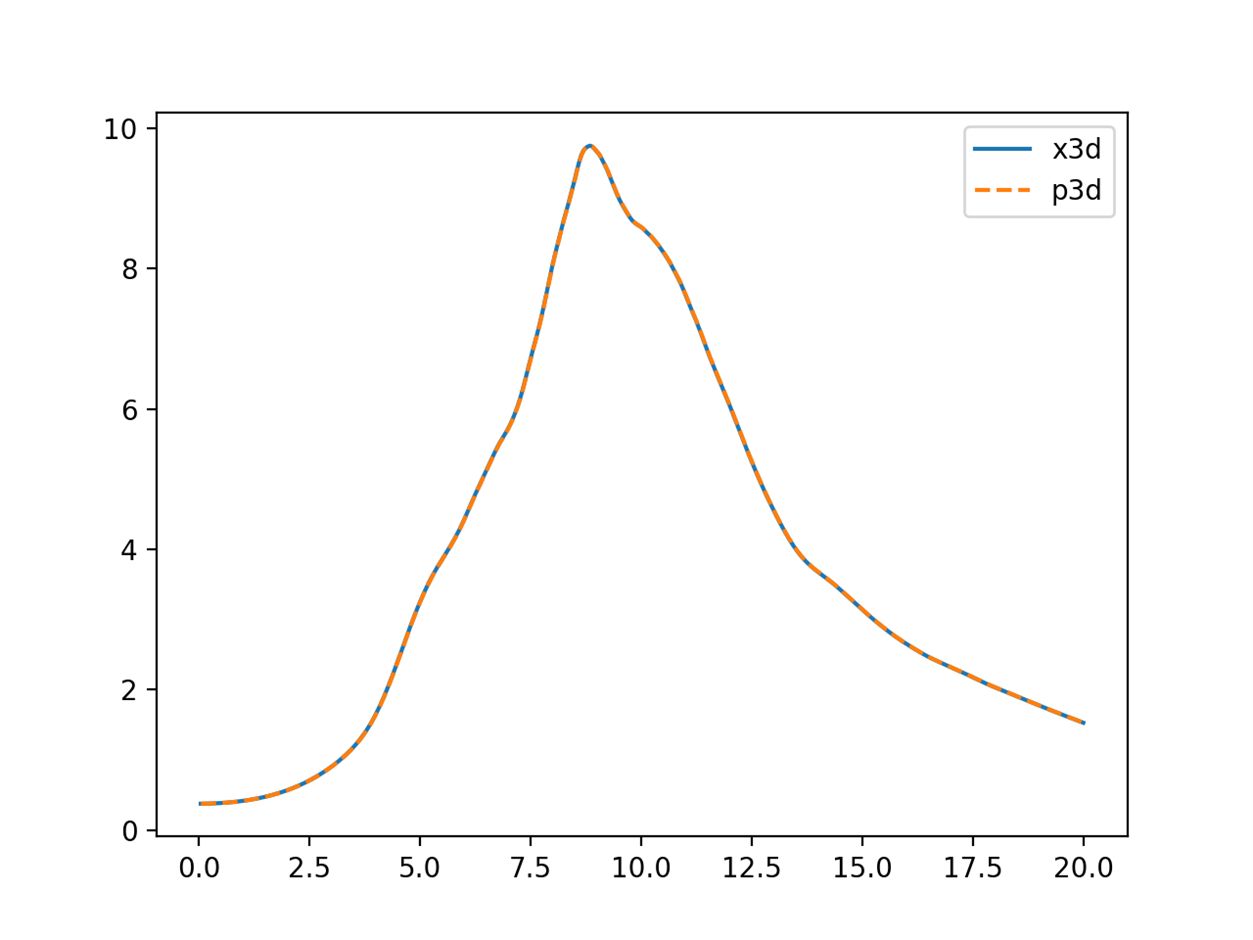

In testing, ADIOS2’s BP4 format was found to outperform the existing MPI-IO solution in 2DECOMP&FFT/Xcompact3D. This is of immediate benefit to users: those on the latest version of Xcompact3D need only recompile their code to enable ADIOS2 I/O. However, some data must be recorded at very high frequency, resulting in data volumes that are impractical both to store and to later load and process. Such data must be processed “in-situ” so that only the reduced scientific data are written (typical examples are integral quantities such as the domain kinetic energy). Using the ADIOS2 data streaming interface (SST), we have demonstrated the capability to couple Xcompact3D and Py4Incompact3D on ARCHER2 to compute per-timestep statistics for the Taylor-Green Vortex example.
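The in-situ pattern can be sketched in a few lines: a producer hands over one field per time step, and the consumer reduces it to a single number before the next step arrives, so the full field is never stored. In the sketch below a Python generator stands in for the SST stream and a toy field stands in for the solver; neither is the actual Xcompact3D or Py4Incompact3D interface. The velocity field is the standard Taylor-Green Vortex initial condition, and the halving of its amplitude at each step is an arbitrary choice to make the output change, not real flow physics.

```python
import math


def toy_simulation(n, steps):
    """Stand-in for the solver: yield (step, field) once per time step.

    The field is a list of (u, v, w) tuples on an n^3 periodic grid over
    [0, 2*pi)^3, using the Taylor-Green Vortex initial condition scaled by
    an arbitrary amplitude that halves each step (illustration only).
    """
    h = 2.0 * math.pi / n
    for step in range(steps):
        amp = 0.5 ** step
        field = []
        for i in range(n):
            for j in range(n):
                for k in range(n):
                    x, y, z = i * h, j * h, k * h
                    u = amp * math.sin(x) * math.cos(y) * math.cos(z)
                    v = -amp * math.cos(x) * math.sin(y) * math.cos(z)
                    field.append((u, v, 0.0))
        yield step, field


def mean_kinetic_energy(field):
    """Reduce a velocity field to its domain-averaged kinetic energy."""
    return 0.5 * sum(u * u + v * v + w * w for u, v, w in field) / len(field)


def analyse_in_situ(stream):
    """Consume the stream step by step, keeping only the reduced data."""
    history = []
    for step, field in stream:
        history.append((step, mean_kinetic_energy(field)))
        # The full field is dropped here; only one number per step is kept.
    return history


for step, energy in analyse_in_situ(toy_simulation(n=16, steps=3)):
    print(step, energy)
```

For this initial condition the domain-averaged kinetic energy is 1/8, which gives a simple check on the reduction at the first step. Each step reduces 16^3 velocity vectors to one value, which is the kind of saving that makes high-frequency statistics affordable.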

Figure 1: Comparing enstrophy computed in-situ by Xcompact3D and via Py4Incompact3D.

Information about the code

The ADIOS2 integration work has been merged into the “master” branch of the Xcompact3D repository and is available by cloning the main repository. The in-situ interface between Py4Incompact3D and Xcompact3D is currently available on the “dev-2decomp4py” branch (for Xcompact3D) and the “dev/in-situ–wrap-2decomp&fft” branch (for Py4Incompact3D). These branches will be merged into the upstream repositories of Xcompact3D and Py4Incompact3D, respectively.

Technical Report

DOI

https://doi.org/10.5281/zenodo.7898885